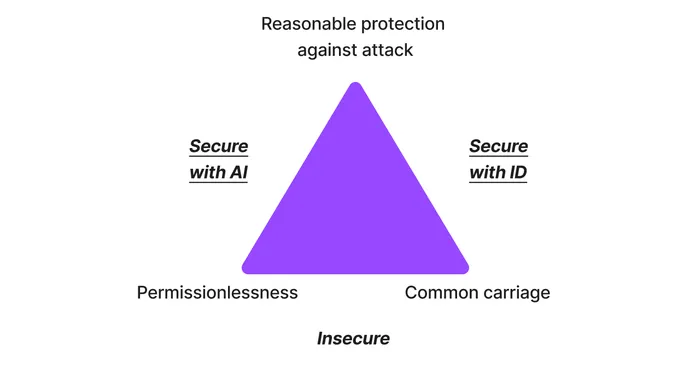

In policy circles, we often hear about the need for a “free,” “open,” and “secure” Internet. This was most recently the case with the White House’s Declaration for the Future of the Internet. Ideally, users benefit from all three properties. However, fundamental tradeoffs between them force us to look for other goals. These three properties present a “trilemma” — and we must choose only two.

As a supposedly “open” Internet faces challenges from autocratic governance models, policymakers should think instead about creating an “equitable, inclusive, and secure” Internet — criteria I believe are actually achievable with policy interventions.

“Free,” “open,” and “secure”? Those are all slippery notions, but they have traditionally been operationalized by three properties.

“Free” has been understood to mean “permissionlessness” — anyone can send anything to anyone.

“Open” has been understood to mean common carriage. Common carriers (traditionally Internet service providers or ISPs) cannot filter content. This notion has been referred to as “net neutrality” in US policy debates.

“Secure” has been understood to mean reasonable protection against attack: Internet users should enjoy reasonable protection against both unfocused attacks (like distributed denial of service, or DDoS) and targeted ones (like ransomware).

To understand this trilemma, let’s start by understanding how security is achieved on the Internet today. Content distribution networks (CDNs), like Cloudflare, use AI to defend against both large-scale and targeted threats. Due to their efficacy, CDNs have grown in importance: Per recent estimates, CDNs now deliver three-quarters of traffic directly to users.

However, CDNs do not provide common carriage: Their algorithms inspect content and make a judgment about whether to allow or block it. Let’s call this strategy “secure with AI.”

Could we have both protection against cyberattacks and common carriage? Yes: We could use cryptographic identities to authenticate traffic. Networks could then carry all traffic without filtering it, and end users could decide which identities to accept (or reject) traffic from. The problem? Sending traffic would now require an identity issued by some authority, so we lose the “free” or permissionless Internet, creating a scenario I call “secure with ID.”
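To make the “secure with ID” idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any deployed system: the authority names, keys, and the `Packet` structure are all hypothetical, and a shared-secret HMAC stands in for the public-key signatures a real design would likely use. The network carries every packet; the receiver alone decides which issuing authorities to trust.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical issuing authorities and their keys (assumptions for this sketch).
# A real system would likely use public-key signatures; HMAC is a stand-in
# so the example runs with only the standard library.
AUTHORITY_KEYS = {
    "authority-a": b"key-for-authority-a",
    "authority-b": b"key-for-authority-b",
}


@dataclass(frozen=True)
class Packet:
    authority: str  # who issued the sender's identity (visible to receivers)
    pseudonym: str  # opaque sender identifier, not a real name
    payload: bytes
    tag: bytes      # authentication tag over pseudonym + payload


def _tag(key: bytes, pseudonym: str, payload: bytes) -> bytes:
    return hmac.new(key, pseudonym.encode() + b"|" + payload, hashlib.sha256).digest()


def sign(authority: str, pseudonym: str, payload: bytes) -> Packet:
    """Create an authenticated packet under an identity issued by `authority`."""
    key = AUTHORITY_KEYS[authority]
    return Packet(authority, pseudonym, payload, _tag(key, pseudonym, payload))


def is_authentic(packet: Packet) -> bool:
    """Check that the tag matches the claimed issuing authority."""
    key = AUTHORITY_KEYS.get(packet.authority)
    if key is None:
        return False  # no recognized identity: the traffic cannot be verified
    expected = _tag(key, packet.pseudonym, packet.payload)
    return hmac.compare_digest(expected, packet.tag)


def receiver_accepts(packet: Packet, trusted_authorities: set[str]) -> bool:
    """The receiver, not the network, applies the accept/reject policy."""
    return is_authentic(packet) and packet.authority in trusted_authorities


packet = sign("authority-a", "user-123", b"hello")
print(receiver_accepts(packet, {"authority-a"}))  # receiver trusts this issuer
print(receiver_accepts(packet, {"authority-b"}))  # receiver does not
```

Two features of the sketch mirror the tradeoff in the text. A sender without an identity from a recognized authority cannot get traffic accepted at all, which is the loss of permissionlessness. And the receiver never sees a name, only a pseudonym and the issuing authority, which is exactly the information a receiver could use to accept or reject whole groups of senders.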

Toward an Inclusive and Equitable Internet

Which world do we prefer?

Let’s start with the “secure with AI” world. We sacrifice common carriage: “Stop worrying and love the algorithm.” Can AI be made inspectable, regulatable, and directly accountable to policymakers, and also be robust against attacks? These questions would guide us in understanding the workability of this approach.

Now, let’s look at the other side: “secure with ID.” We regain common carriage but open up new avenues for discrimination. A properly engineered system would obscure a user’s exact identity (e.g., one’s name), but it would still reveal which authority granted that identity (e.g., “Is this person American?”).

In navigating these two worlds, inclusion and equity must be our north star.

Imagine you live in Ghana. As research on this topic reveals, people from countries like Ghana are regularly prevented from accessing websites, thanks to AI-powered “security” measures from CDNs. How can we make a democratically governed Internet more inclusive while retaining our democratic values?

In the “secure with AI” world, we would need Ghanaians (and others from countries in which connectivity is fast-rising) to sit at the table where algorithms are governed.

In the “secure with ID” world, we would need to give Ghanaians robust digital identities: not only to hand Ghanaians identities, but to make these identities legible and usable in actual practice, and to give Ghanaians a say in how those identities work.

Which is harder? Which is more inclusive in practice? Which does a better job of assuring a democratic Internet, one subject to the will of the broadest number of people — not in theory, but in practice?

Looking Over the Horizon

Artificial intelligence, quantum communication, bioengineered pathogens: Various near-future technologies will require strict governance and sharp policy thinking.

These threats are — and I’ll use a technical term here — scary. Here’s where I take solace: If we can manage to govern the Internet, we’ll find ourselves many steps closer to managing technology broadly.

The Internet governs the way technologies communicate with one another. Just as we govern transportation by placing roads and skies under publicly accountable institutions, we can govern technology, particularly AI, by placing Internet infrastructure under the right kinds of public accountability.

Democracy can find an answer here, and that answer will propel us closer to a model of democratically accountable technology.

What does that accountability actually look like? Answers to this question will help shape the future. Will our children, and their children, fear technology, or control it? Will they be watched over by technologies — by technocrats — or will they do the watching?