Source: www.securityweek.com – Author: Kevin Townsend

Machine identity firm Venafi has launched a proprietary generative AI (gen-AI) model to help with the mammoth, complex, and expanding problem of managing machine identities.

OpenAI led the charge into wide-scale use of gen-AI within applications with the release of ChatGPT. Gen-AI allows people to use AI, but it is also open to misuse and abuse. Users rapidly come to rely, perhaps over-rely, on the responses or instructions delivered by gen-AI models. So, while the inclusion of gen-AI has become essential for both performance and marketing purposes, it is critical that it be included carefully.

Venafi Athena is launched with three primary focuses: for security teams, for developers, and for the community. Two are available now, one will be available in 2024, and there is a promise of more to come.

Athena for security teams is available today. “It’s all about making decisions; making Venafi easier, more accessible, and faster to use,” explained Kevin Bocek, Venafi’s VP of ecosystem and community. “We’ve brought a sophisticated chatbot interface to the control plane. It used to require an expert in both Venafi and machine identities to pose the right questions, but now I can ask Athena for step by step configuration guidance from any starting point, and can even ask how long it will take me.”

The second focus is Athena for developers. It reverses the problem of getting security folk to work with development teams by making security know-how and requirements available to the developers on demand. It will be available in 2024, but a prototype was demonstrated at the Venafi Machine Identity Summit in Las Vegas.

“This is not for security people; it’s very much for the engineers,” said Bocek. “As a developer, I’ll be able to generate code that is very specific to the problem I’m looking to solve, the language I want to use, and the machine identity types that I need to be working with. I can take that generative code and I can deploy that into test or production with the Venafi control plane. It’s not just generating the code; it also provides the ability to move that code into production.”

Athena for the community is Venafi’s new experimental lab. “We’re launching an all-new project that completely redefines reporting and answers for machine identity management problems. This is available today with code examples and data with pre-identified features for machine learning on GitHub and Hugging Face,” said the company. (Hugging Face describes itself as, ‘The platform where the machine learning community collaborates on models, datasets, and applications.’) “Venafi now offers an experimental laboratory to give you early access to innovative generative AI and machine identity data capabilities,” it adds.

“Much like GitHub is for engineers, Hugging Face is the hub for AI and machine learning experts,” said Bocek. “We’ll be releasing two projects. One, for example, is called Vikram (after the Vikram of Indian mythology) Explorer. I can ask Vikram, what are the five certificates that are going to expire soonest? And Vikram will answer that for me. I can ask Vikram to visually graph certain problems like let’s say I want to know the trend in certificate issuance? Or what are the top applications that are being used for automation?”

It’s quite innovative and experimental, he added, “because it combines in-memory databases along with generative code. It’s not actually submitting your data to a large language model — It’s actually getting code that is going to analyze your data and then running that in memory.”
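The pattern Bocek describes can be illustrated with a minimal sketch. This is not Venafi's implementation: the table layout, the sample certificates, and the `generate_sql` function (a hard-coded stand-in for a gen-AI call) are all assumptions made for illustration. The point it shows is that only the question and the table schema would be handed to the model, while the certificate rows stay in a local in-memory database where the generated query is run.

```python
import sqlite3

# Hypothetical schema description: the only data-related text a model would see.
SCHEMA = "certificates(common_name TEXT, not_after TEXT)"

# Made-up sample inventory; not_after uses ISO dates so text ordering is date ordering.
SAMPLE_ROWS = [
    ("api.example.com", "2024-03-01"),
    ("db.example.com", "2023-12-15"),
    ("mail.example.com", "2024-01-20"),
    ("vpn.example.com", "2025-06-30"),
    ("ci.example.com", "2023-11-05"),
    ("web.example.com", "2024-02-10"),
]


def generate_sql(question: str, schema: str) -> str:
    """Hypothetical stand-in for a gen-AI call.

    It receives the question and the schema, never the rows. The returned
    query is hard-coded here so the sketch runs offline.
    """
    return (
        "SELECT common_name, not_after FROM certificates "
        "ORDER BY not_after ASC LIMIT 5"
    )


def build_inventory(rows):
    """Load certificate records into an in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE certificates (common_name TEXT, not_after TEXT)")
    conn.executemany("INSERT INTO certificates VALUES (?, ?)", rows)
    return conn


def answer(question: str, conn: sqlite3.Connection):
    """Get generated code for the question, then run it locally in memory."""
    sql = generate_sql(question, SCHEMA)
    # Basic guard: accept only a single read-only SELECT statement.
    if not sql.lstrip().upper().startswith("SELECT") or ";" in sql:
        raise ValueError("generated code is not a single SELECT statement")
    return conn.execute(sql).fetchall()


if __name__ == "__main__":
    inventory = build_inventory(SAMPLE_ROWS)
    question = "What are the five certificates that are going to expire soonest?"
    for name, expires in answer(question, inventory):
        print(name, expires)
```

In a real deployment the generated code would need stricter validation than the simple check above, since code produced by a model is itself untrusted input.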

This last point is important. It demonstrates Venafi’s awareness and concerns over the new threats that AI can introduce while attempting to solve existing, traditional security threats. By exposing its project plans to the open source community, Venafi hopes to spot and solve these threats.

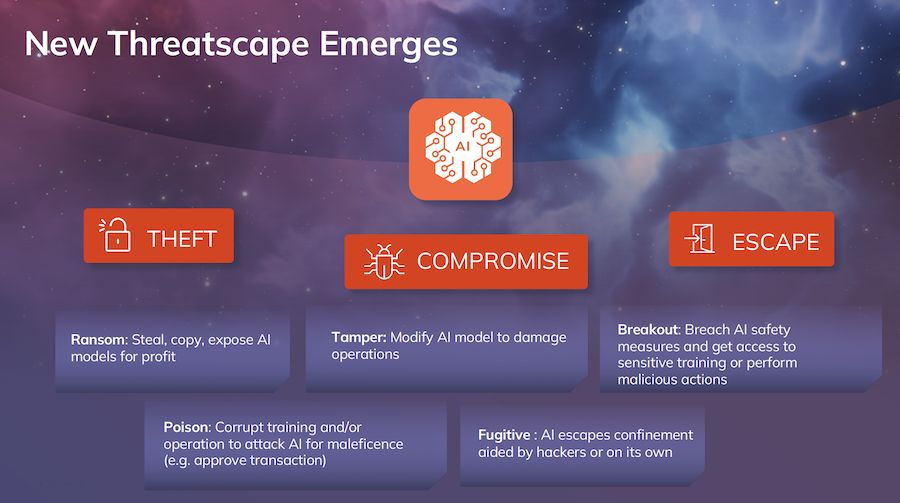

In its own presentation at the Venafi Machine Identity Summit, the company summarizes the AI threat landscape as covering data theft, compromise, and escape.

Theft and compromise can result from data poisoning. Escape is breaching inbuilt guardrails through engineered queries designed to force the AI to provide otherwise prohibited responses.

To this we can add the privacy issue. Gen-AI models can retain and use the data they receive in queries, which can make that data available to third parties. The Vikram Explorer approach addresses this by never submitting data directly to the gen-AI model, making the theft of query data, if not impossible, at least significantly more difficult.

“Machine identity management is complex and challenging, particularly as we move towards a cloud native future,” says Shivajee Samdarshi, CPO at Venafi. “Modern enterprises require a fast, easy, and integrated way to tackle these complex machine identity management problems. The power of generative AI and machine learning makes this possible today.”

Original Post URL: https://www.securityweek.com/venafi-leverages-generative-ai-to-manage-machine-identities/

Category & Tags: Security Infrastructure – Security Infrastructure