Source: www.govinfosecurity.com – Author: Michael Novinson

Artificial Intelligence & Machine Learning, Next-Generation Technologies & Secure Development

Senate Hearing Examines Misuse of Advanced AI Systems, Risks With Chinese Nationals

Michael Novinson (MichaelNovinson) • September 7, 2023

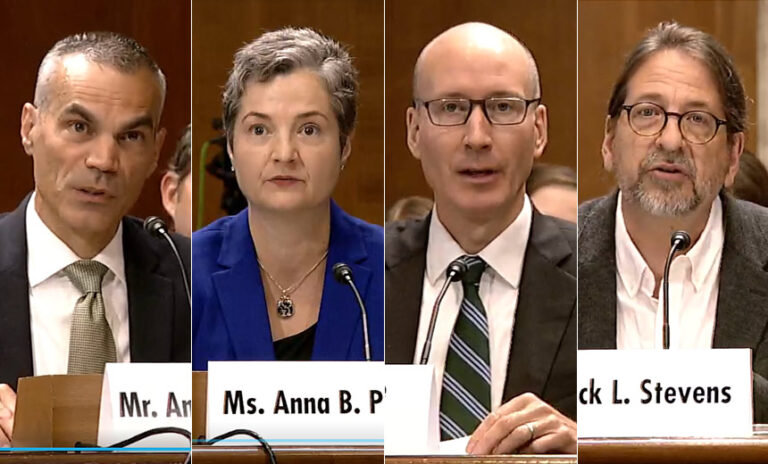

Artificial intelligence makes it easier for adversaries to harm the United States and introduces new risks around malicious insiders with loyalties to China, a Senate panel heard during a Thursday hearing.


Generative AI can assist less technically sophisticated threat actors in executing complex cyberattacks, meaning government agencies such as the Department of Energy must improve their detection capabilities, Deputy Secretary of Energy David Turk told the Senate Energy and Natural Resources Committee.

“We’re going to have to get smarter about how we manage risks associated with advanced AI systems,” said Argonne National Laboratory Associate Laboratory Director Rick Stevens. “There’s no putting Pandora back in the box. In the next few years, every person is going to have a very powerful AI system in their pocket.”

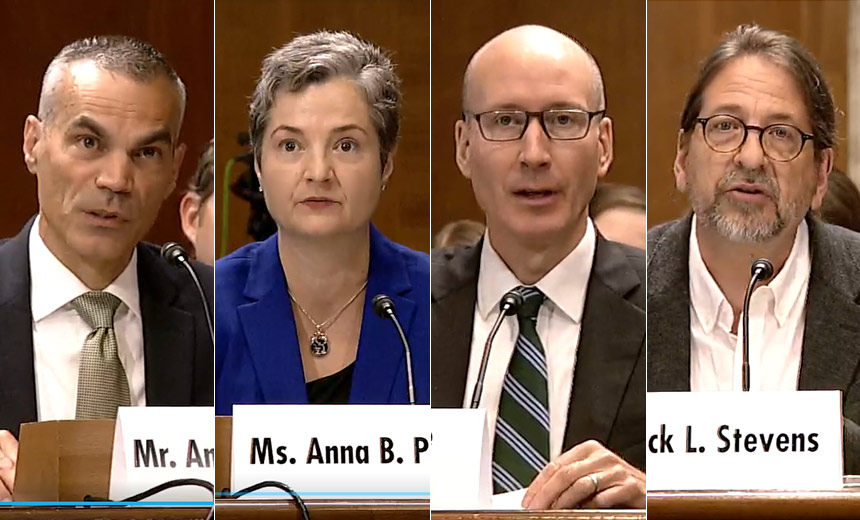

Turk and Stevens testified alongside Georgetown University Senior Fellow Anna Puglisi and Hewlett Packard Enterprise Vice President Andrew Wheeler.

Stevens said the Department of Energy and the National Institute of Standards and Technology have joined forces on an AI risk management framework that uses humans to evaluate the trustworthiness and alignment of artificial intelligence models. But the process as currently envisioned can’t scale to the number of models that need evaluating, he said: More than 100 large language models are circulating in China, and more than 1,000 LLMs are already in place in the United States.

Stevens also said researchers must determine how to scale the ability to assess and evaluate risk in current and future AI models as well as how to build AI models that reliably align with fundamental human values, which he acknowledged isn’t yet possible (see: Supply Chain, Open Source Pose Major Challenge to AI Systems).

“We’re going to have to build capabilities using the supercomputers we have and the initial AI systems to assess other AI systems and say, ‘This model is safe,’” Stevens said.

Hewlett Packard Enterprise spent more than a year and a half developing AI ethics principles that walk engineers through what it means to use AI in product development and how to properly deploy tools that harness AI, Wheeler said. But he acknowledged these efforts can’t solve every problem, since no amount of adherence to principles can stop a malicious insider or a threat actor who gains access to systems.

“There’s a broad field of study around trustworthy AI, which ultimately can provide some of those guardrails,” Wheeler said. “But we’re still really in the early days of deploying some of those solutions and there’s a lot of work that’s left.”

Resisting Chinese Personnel Infiltration

Puglisi and Turk discussed how best to take advantage of a global AI talent pool while not allowing Chinese government sympathizers to infiltrate the Energy Department or national labs. Puglisi said U.S. government agencies need to be honest about the amount of pressure the Chinese government can bring to bear on Chinese nationals who still have family in the country.

Puglisi asserted the United States has looked the other way for too long when it comes to China shirking obligations around sharing data, providing access to facilities and being upfront about the true affiliations of its scientists. She said there need to be repercussions when China doesn’t hold up its end of agreements.

“Existing policies and laws are insufficient to address the level of influence that the Chinese Communist Party exerts in our society, especially in academia and research,” Puglisi said. “Increased reporting requirements for foreign money for academic and research institutions and clear reporting requirements and rules on participation in foreign talent programs are a good start.”

Turk said anyone who’s been part of a foreign talent recruitment program is prohibited from working in a national lab, adding that the agency actively examines ways the Chinese government or others might circumvent those restrictions. The Energy Department also has a science and technology risk matrix that goes beyond export control rules and classifies AI as one of the six most sensitive technologies.

The department updates its risk matrix annually and puts extra protections in place – including additional screenings – to ensure AI and other sensitive technologies are adequately protected, Turk said. The department also has counterintelligence experts in both its field offices and national laboratories who look into allegations of Chinese intrusion.

“We are actively investigating and making sure that we’re following up on any leads so that we can be as thoughtful and proactive as we possibly can,” he said.

Original Post URL: https://www.govinfosecurity.com/experts-probe-ai-risks-around-malicious-use-china-influence-a-23032
