Source: securityboulevard.com – Author: Evan Powell

A long-promised approach comes of age

I won’t revisit the arguments for anomaly detection as a crucial piece of cybersecurity. We’ve seen waves of anomaly detection over the years — and CISA, DARPA, Gartner, and others have explained the value of anomaly detection. As rules-based detections show their age and attackers adopt AI to accelerate their innovation, anomaly detection is more needed than ever.

However, traditional anomaly detection has been caught in a Sisyphean cycle: build multiple bespoke models for each account, tune them endlessly, and still face diminishing returns as threats and the operating environment evolve.

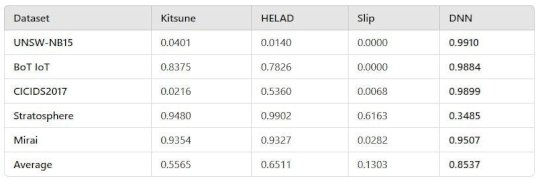

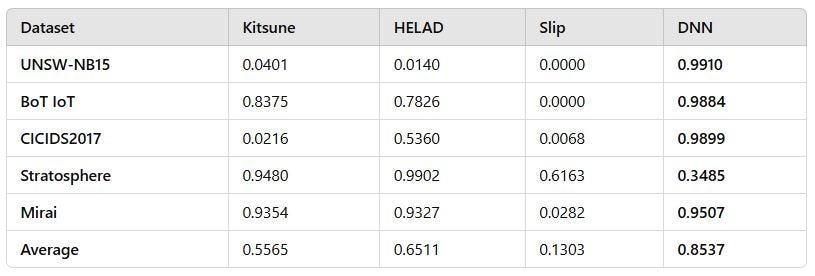

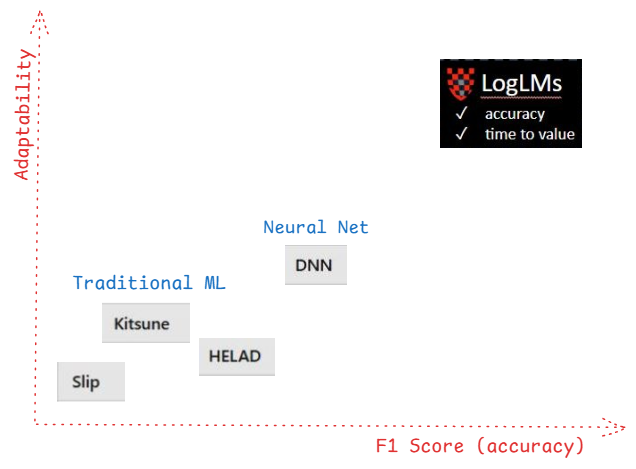

In a recent paper, “Expectations Versus Reality: Evaluating Intrusion Detection Systems in Practice” (arXiv:2403.17458), the authors compare traditional machine-learning-based anomaly detection approaches with a deep neural network (DNN)-based system across several benchmarks, including CICIDS2017 and UNSW-NB15. The DNN outperformed the other approaches on all datasets but one, delivering both higher F1 scores and more consistent performance across evaluation datasets.

In the paper's results, the DNN outperformed the prior-generation ML approaches both in accuracy, as measured by F1 score, and in adaptability, as shown by its having the most consistent performance across datasets. The Stratosphere use case, where the DNN performed least well, is a very specific IoT dataset. It appears that the DNN had not been exposed to this sort of data in its training.

The conclusion is clear: better models are both more accurate AND generalize better.
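To make the two measures concrete: F1 is the harmonic mean of precision and recall, and consistency can be summarized as the spread of F1 across datasets. The sketch below is only an illustration under stated assumptions. The model and dataset names are placeholders, and the confusion counts are made up rather than taken from the paper.

```python
from statistics import mean, pstdev


def f1_score(tp, fp, fn):
    """F1 = 2*TP / (2*TP + FP + FN), the harmonic mean of precision and recall."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def summarize(per_dataset):
    """Return (mean F1, population std dev of F1) across datasets."""
    scores = [f1_score(*counts) for counts in per_dataset.values()]
    return mean(scores), pstdev(scores)


# Hypothetical (TP, FP, FN) counts per dataset. NOT taken from the paper.
results = {
    "model_a": {"dataset_1": (90, 10, 10), "dataset_2": (80, 20, 20)},
    "model_b": {"dataset_1": (95, 5, 5), "dataset_2": (40, 30, 60)},
}

for name, per_dataset in results.items():
    avg, spread = summarize(per_dataset)
    print(f"{name}: mean F1 = {avg:.3f}, spread = {spread:.3f}")
```

A model with a higher mean and a lower spread is both more accurate and more consistent in the sense used above. Other spread measures (range, worst-case F1) would serve equally well.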

The Monolithic Past: Fragile, Overfit, and Labor-Intensive

Traditional anomaly detection relies on one or more bespoke models for every environment.

Every model deployed requires:

- Hand-tuned thresholds.

- Manual feature engineering.

- Endless maintenance including retraining and evaluation to combat obsolescence.

These systems also suffer from overfitting: they lock into patterns specific to the training data and are brittle in the face of novel threats. Worse, they’re resource hogs, demanding constant attention from detection engineers. While anomaly detection usage is increasing, it is easy to see why many detection engineers find these systems only slightly less frustrating than the thicket of rules-based detections they are expected to maintain and rely upon.
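As a rough illustration of that maintenance burden, here is a minimal sketch of a bespoke per-account detector with a hand-tuned z-score threshold. Everything in it is an assumption made for illustration: the class name `PerAccountDetector`, the events-per-hour feature, the threshold values, and the sample data.

```python
from statistics import mean, stdev


class PerAccountDetector:
    """One z-score detector per account, with a hand-tuned threshold."""

    def __init__(self, threshold):
        self.threshold = threshold  # chosen by hand for each account
        self.mu = 0.0
        self.sigma = 1.0

    def fit(self, baseline):
        """Learn the account's 'normal' from a baseline window."""
        self.mu = mean(baseline)
        self.sigma = stdev(baseline) or 1.0  # guard against zero variance
        return self

    def is_anomalous(self, value):
        return abs(value - self.mu) / self.sigma > self.threshold


# Hypothetical events-per-hour baseline for one account.
detector = PerAccountDetector(threshold=3.0).fit([10, 12, 11, 9, 10, 11])

print(detector.is_anomalous(11))  # within the learned baseline
print(detector.is_anomalous(40))  # far outside the learned baseline

# If the account's normal workload drifts to about 30 events per hour,
# every observation is flagged until someone re-fits and re-tunes.
drifted = [30, 31, 29, 32]
print([detector.is_anomalous(v) for v in drifted])
```

Multiply this by every account and every feature, and the retraining and threshold tuning described above becomes a standing workload.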

Enter Foundation Models + Classifiers

Foundation models like Tempo, our deep neural network-based LogLM, flip this paradigm. Trained on vast, diverse datasets, they deliver a generalizable core capable of identifying patterns across environments. Fine-tuned classifiers adapt the model for specific accounts or threat profiles with minimal overhead.

This approach isn’t just scalable; it’s transformative. Decoupling the core model from account-specific adjustments frees detection engineers from endless firefighting.

For the first time, we can have Collective Defense — via ever-improving foundation models — plus rapid customization and adaptation.
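The decoupling can be pictured as a frozen shared encoder plus a small trainable head per account. The sketch below is a toy under loud assumptions and does not describe how Tempo is built: `frozen_embed` is a hypothetical stand-in for a pretrained model, the event fields are invented, and the head is a plain logistic regression trained by gradient descent.

```python
import math


def frozen_embed(event):
    """Stand-in for a pretrained, frozen encoder (hypothetical).

    A real foundation model would return a learned embedding; this toy
    version just rescales two invented fields.
    """
    return [event["bytes_out"] / 1e6, event["distinct_ports"] / 100.0]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(head, x):
    w, b = head
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def train_head(X, y, lr=0.1, epochs=2000):
    """Fit only the small classifier head; the encoder is never updated."""
    w, b = [0.0] * len(X[0]), 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = predict((w, b), xi) - yi
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Hypothetical labeled events for one account (1 = malicious).
events = [
    ({"bytes_out": 100_000, "distinct_ports": 1}, 0),
    ({"bytes_out": 200_000, "distinct_ports": 3}, 0),
    ({"bytes_out": 300_000, "distinct_ports": 5}, 0),
    ({"bytes_out": 5_000_000, "distinct_ports": 40}, 1),
    ({"bytes_out": 7_000_000, "distinct_ports": 60}, 1),
    ({"bytes_out": 9_000_000, "distinct_ports": 80}, 1),
]
X = [frozen_embed(e) for e, _ in events]
y = [label for _, label in events]
head = train_head(X, y)

new_event = {"bytes_out": 8_000_000, "distinct_ports": 70}
print(f"P(malicious) = {predict(head, frozen_embed(new_event)):.3f}")
```

The point of the design is the parameter count: adapting to a new account touches only the head (three numbers here), while the shared encoder stays fixed and can be improved centrally.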

Precision, Adaptability, and the Future

The cited paper’s results reveal a crucial advantage of foundation models: they’re not just more accurate but more adaptable. Higher F1 scores across datasets imply better generalization — an essential trait in an industry where yesterday’s threat model is obsolete tomorrow.

Our LogLM Tempo builds on these principles, delivering:

- Precision: Enhanced detection accuracy.

- Efficiency: A single model architecture that scales across accounts and use cases.

- Adaptability: These models generalize well and can be further adapted quickly by fine-tuning classifiers.

Classifiers make it efficient to apply a foundation model to particular use cases. For example, we use a classifier on top of our Tempo foundation model to essentially learn a threshold for anomalies. Users of our free-to-try Tempo on Snowflake can also fine-tune for their environment. This fine-tuning adapts an anomaly-discerning classifier, which is much more efficient than retraining an entire model.
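One simple way to picture "learning a threshold" is to choose the cut-off on anomaly scores that maximizes F1 on a small labeled sample. The sketch below shows that general idea only. It is an assumption, not a description of Tempo's classifier, and the scores and labels are invented.

```python
def f1_at(threshold, scores, labels):
    """F1 when every score >= threshold is flagged as an anomaly."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if s >= threshold and y == 1)
    fp = sum(1 for s, y in pairs if s >= threshold and y == 0)
    fn = sum(1 for s, y in pairs if s < threshold and y == 1)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def learn_threshold(scores, labels):
    """Pick the observed score that gives the best F1 as the cut-off."""
    candidates = sorted(set(scores))
    return max(candidates, key=lambda t: f1_at(t, scores, labels))


# Hypothetical anomaly scores from a base model, with analyst labels
# for one environment (1 = confirmed anomaly).
scores = [0.05, 0.10, 0.20, 0.35, 0.60, 0.70, 0.90]
labels = [0, 0, 0, 1, 0, 1, 1]

threshold = learn_threshold(scores, labels)
print(f"learned threshold = {threshold}, F1 = {f1_at(threshold, scores, labels):.3f}")
```

Because only the cut-off is fitted, re-running this on a new environment's labeled sample is cheap compared with retraining the model that produces the scores.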

Take a look at the Snowflake listing here:

Conclusion

Anomaly detection, a long-promised and yet often frustrating approach to cybersecurity incident identification, is coming of age. Recent studies have shown that neural-network-based approaches are both more accurate and more adaptable. Our Tempo LogLM takes this to the next level, adding easy-to-use classifiers onto a foundation transformer-based deep learning model for further adaptability and accuracy.

In these blogs we are trying to explain how the state of the art in incident detection is changing. We believe that increased accuracy and adaptability open up the opportunity for collective defense and new business models.

Please let us know below if you have suggestions or questions you would like us to dig into.

Anomaly Detection for Cybersecurity was originally published in DeepTempo on Medium, where people are continuing the conversation by highlighting and responding to this story.

*** This is a Security Bloggers Network syndicated blog from Stories by Evan Powell on Medium authored by Evan Powell. Read the original post at: https://medium.com/deeptempo/anomaly-detection-for-cybersecurity-65b3baa0b9b7?source=rss-36584a5b84a------2

Original Post URL: https://securityboulevard.com/2024/12/anomaly-detection-for-cybersecurity/

Category & Tags: Security Bloggers Network, Cybersecurity, deep learning, snowflake
