Source: www.securityweek.com – Author: Kevin Townsend

If deepfakes were a disease, this would be a pandemic. Artificial Intelligence (AI) now generates deepfake voice at a scale and quality that has bridged the uncanny valley.

Fraud is increasingly being fueled by voice deepfakes. An analysis by Pindrop (using a ‘liveness detection tool’) examined 130 million calls in Q4 2024 and found a 173% increase in the use of synthetic voice compared with Q1. This growth is expected to continue, with AI models like Respeecher (legitimately used in movies, video games and documentaries) able to change pitch, timbre, and accent in real time, effectively adding emotion to a mechanically produced voice. Synthesized voice has successfully crossed the so-called uncanny valley.

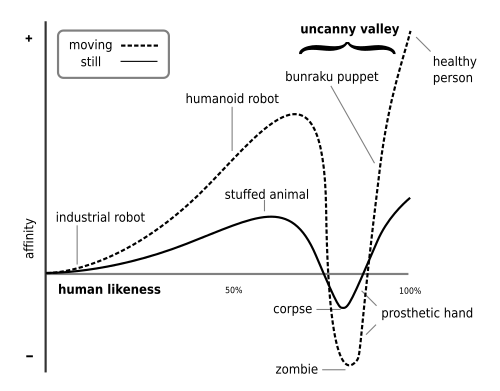

The ‘uncanny valley’ is the dip in human acceptance of an artificial likeness as it becomes almost, but not quite, lifelike, followed by a sharp rise once it becomes convincingly realistic. It was described in 1970 by Japanese robotics engineer Masahiro Mori. The effect is accentuated by movement in the subject: Mori was writing about robots, but the same applies to a moving, speaking voice today. Deepfake synthesis has now improved to the point where initial distrust is being replaced by active and increasing acceptance. A human listener can no longer reliably detect a voice deepfake.

Rahul Sood, Pindrop’s CPO, gives an example. “We generated a deepfake of one of our board members, using samples from his internet activity and one of the standard voice engines to mimic his voice pace, emotion, accent etcetera. The quality of the result was so good that when he played it to his wife, she failed to recognize that it was a fake.”

Crossing the uncanny valley explains the growth in deepfake voice fraud and suggests there is more to come. Pindrop research found that large national banks received more than five deepfake attacks per day in Q4 2024, compared with fewer than two per day in Q1. Regional banks saw a similar increase, from under one per day to more than three per day, and one financial institution reported a 12x increase in deepfake activity in 2024 alone. Pindrop expects deepfake-related fraud to grow by a further 162% by the end of 2025.
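Growth figures like these are easy to misread, because a percentage increase is not the same as a multiplier. The short Python sketch below uses the standard library's `fractions` module for exact arithmetic to convert between the two and to derive lower bounds from the per-day counts quoted above. The function names are illustrative only and do not come from Pindrop's report; reading "12x" as "12 times the earlier level" is also an assumption.

```python
from fractions import Fraction
from numbers import Rational


def multiplier(pct_increase: Rational) -> Fraction:
    """A p% increase means the new level is (1 + p/100) times the old level."""
    return 1 + Fraction(pct_increase) / 100


def percent_increase(old: Rational, new: Rational) -> Fraction:
    """Percentage change from `old` to `new`."""
    return (Fraction(new) - Fraction(old)) / Fraction(old) * 100


# 173% more synthetic voice in Q4 than in Q1: 2.73 times the Q1 level.
q4_vs_q1 = multiplier(173)

# Large banks: "fewer than two" per day in Q1, "more than five" in Q4.
# Plugging in the boundary values gives a floor: growth exceeded 150%.
large_bank_floor = percent_increase(2, 5)

# Regional banks: "under one" to "more than three" per day: growth exceeded 200%.
regional_floor = percent_increase(1, 3)

# Assumption: "12x" read as 12 times the earlier level, i.e. an 1,100% increase.
twelve_fold = percent_increase(1, 12)

# A further 162% growth by the end of 2025: 2.62 times the current level.
projected = multiplier(162)
```

Because the bank counts are given as "more than" and "fewer than", the boundary values only bound the growth from below; the true increases could be larger.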

Of course, an attack is merely an annoyance until it succeeds. The battlefield is deepfake detection versus deepfake generation. In one sense, this is not so different from standard cybersecurity: a constant leapfrogging of advantage between attacker and defender. Voice biometrics used as an MFA factor, for example, is now ineffective unless it is backed by new AI-driven synthetic voice detection.

For now, defenders can still detect synthetic voice. Sood explains how, and why he thinks that will continue. First, deepfakes are designed to be imperceptible to human hearing, not to electronic analysis. “Because these two objectives are asymmetric, we believe we will always be able to detect deepfakes because of imperceivable imperfections in the audio.”

Part of the reason is the sheer number of data points that detection can examine. “Audio is an information rich medium,” he continued. “Even a telephone call is an eight kilohertz audio channel, meaning we get 8,000 voice signals per second that can be probed.” The defense looks for the smallest clues, such as tiny response delays or minute inconsistencies in the voice pattern. This is done through continuous monitoring of the call, which adds little or no discernible latency (perhaps a few hundred milliseconds).
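Sood's point about 8,000 samples per second can be made concrete. The Python sketch below is a toy illustration, not Pindrop's method: it chops a stream of 8 kHz telephone audio into overlapping 20 ms frames, computes two simple per-frame features (RMS energy and zero-crossing rate), and flags frames that deviate sharply from the rest of the call. The frame and hop sizes, the features, the helper names and the three-standard-deviation threshold are all assumptions chosen for illustration; a real detector would use far richer features and a trained model.

```python
import math
import statistics
from typing import Iterator, List, Sequence, Tuple

SAMPLE_RATE_HZ = 8_000  # narrowband telephone audio: 8,000 samples per second


def samples_in(ms: float, rate: int = SAMPLE_RATE_HZ) -> int:
    """Number of samples covered by a window of `ms` milliseconds."""
    return round(rate * ms / 1000)


def sliding_frames(audio: Sequence[float], frame_ms: float = 20,
                   hop_ms: float = 10) -> Iterator[Sequence[float]]:
    """Yield overlapping short frames, as a streaming monitor would see the call."""
    size, hop = samples_in(frame_ms), samples_in(hop_ms)
    for start in range(0, len(audio) - size + 1, hop):
        yield audio[start:start + size]


def frame_features(frame: Sequence[float]) -> Tuple[float, float]:
    """Two toy per-frame features: RMS energy and zero-crossing rate."""
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return rms, crossings / (len(frame) - 1)


def flag_outliers(values: Sequence[float], z: float = 3.0) -> List[int]:
    """Indices of frames more than `z` standard deviations from the call's mean."""
    mean = statistics.fmean(values)
    spread = statistics.pstdev(values)
    if spread == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mean) > z * spread]


# Toy input: one second of a 200 Hz tone with a 10 ms burst of extra energy.
audio = [0.3 * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE_HZ)
         for n in range(SAMPLE_RATE_HZ)]
for n in range(4000, 4080):
    audio[n] *= 5

energies = [frame_features(f)[0] for f in sliding_frames(audio)]
print(len(energies), flag_outliers(energies))  # 99 [49, 50]
```

At 8 kHz, a 20 ms frame holds 160 samples and a 300 ms window holds 2,400, so a monitor that trails the live call by only a few hundred milliseconds still has thousands of samples to examine.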

Pindrop’s monitoring system is trained on the output of existing voice generation models. Sood provides a specific example. “Last month we tested against a new model from Nvidia, meaning we had never seen its output before. Even so, our detection accuracy was close to 90%. But after adding some Nvidia-produced samples to our training, our accuracy increased to 99%. So, in a live situation, such as a call center, where deepfake detection is used as a part of a layered MFA defense, deepfake detection will catch almost all deepfake attacks.”
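The jump from roughly 90% to 99% matters more than it looks, because what counts in a call center is the share of attacks that get through. The Python sketch below treats Sood's accuracy figures as detection rates (an assumption: overall accuracy also reflects how genuine callers are classified) and shows that the move cuts missed deepfakes tenfold. It then combines the detector with a second MFA layer, assuming the layers fail independently; the 50% miss rate for that second layer and the 10,000-attack volume are hypothetical values, not figures from Pindrop.

```python
from fractions import Fraction
from math import prod
from typing import Sequence


def missed(attacks: int, detection_rate: Fraction) -> Fraction:
    """Expected number of deepfake attacks that slip past a single detector."""
    return attacks * (1 - detection_rate)


def layered_miss_rate(miss_rates: Sequence[Fraction]) -> Fraction:
    """Chance an attack evades every layer, assuming the layers fail independently."""
    return prod(miss_rates, start=Fraction(1))


attacks = 10_000  # hypothetical attack volume
before = missed(attacks, Fraction(90, 100))  # 1,000 missed at 90%
after = missed(attacks, Fraction(99, 100))   # 100 missed at 99%

# Hypothetical second factor that, on its own, stops only half of attackers.
combined = layered_miss_rate([Fraction(1, 100), Fraction(1, 2)])  # 1/200
```

Independence is the key assumption here: if an attacker who defeats the voice detector is also more likely to defeat the other factor, the combined miss rate will be higher than the simple product suggests.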

The moral is simple: deepfakes are increasing in both quality and scale. They can be defeated only if you stay up to date with new deepfake detection technologies; but if you don’t, you’re likely to be seriously faked.

Original Post URL: https://www.securityweek.com/deepfakes-and-the-ai-battle-between-generation-and-detection/

Category & Tags: Artificial Intelligence, AI, Deepfake, Featured