Source: www.cyberdefensemagazine.com – Author: News team

The world’s first comprehensive artificial intelligence law, the EU AI Act, finally entered into force on 1 August 2024, more than three years after the European Commission first proposed it in April 2021. After years of political debate and negotiation, what does this mean for us and the broader AI community in 2024?

Artificial Intelligence (AI) is transforming our world in unprecedented ways. From personalized healthcare to self-driving cars and virtual assistants, AI is becoming ubiquitous in our daily lives. However, this growing use of AI has raised many concerns about its impact on fundamental rights and freedoms. In response to this, the European Union (EU) has taken a significant step to regulate AI.

The EU AI Act (Artificial Intelligence Act) is the world’s first concrete initiative for regulating AI. It aims to turn Europe into a global hub for trustworthy AI by laying down harmonized rules governing the development, marketing, and use of AI in the EU, ensuring that AI systems in the EU are safe and respect fundamental rights and values. It also aims to foster investment and innovation in AI, enhance governance and enforcement, and encourage a single EU market for AI.

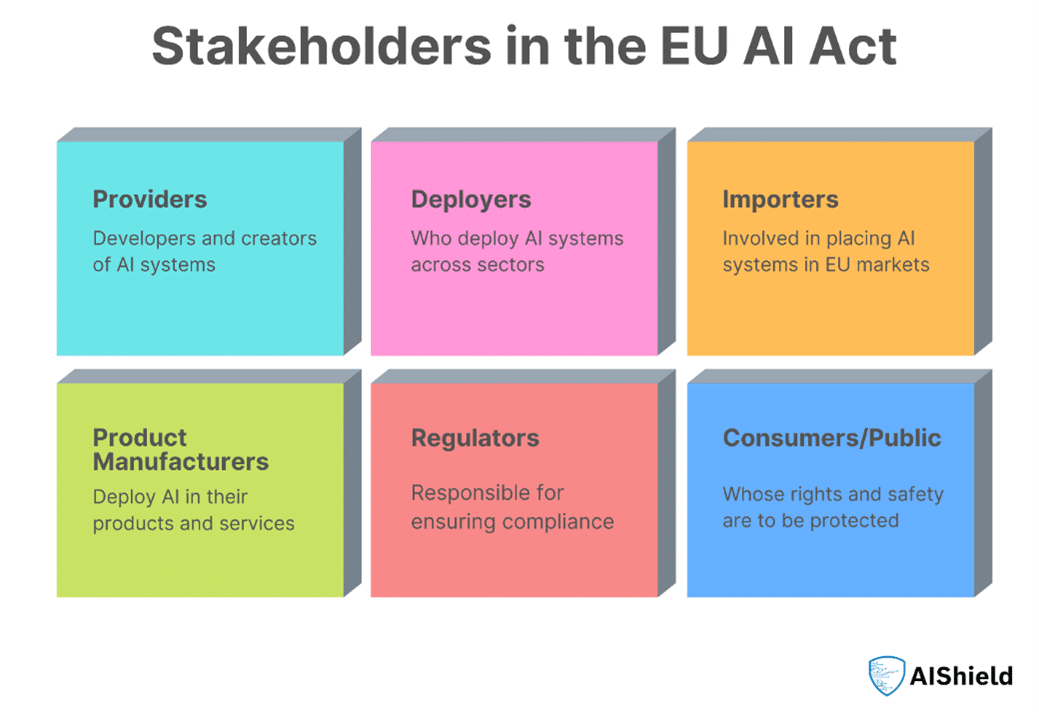

Stakeholders: Who is affected?

The AI Act sets out clear definitions for the different actors involved in AI: providers, deployers, importers, distributors, and product manufacturers. This means all parties involved in the development, use, import, distribution, or manufacturing of AI systems will be held accountable. The AI Act also applies to providers and deployers of AI systems located outside the EU, e.g., in Switzerland, if the output produced by the system is intended to be used in the EU.

- AI system providers: Organizations and individuals who develop or create AI systems, including software developers and technology firms.

- AI system deployers: Organizations that deploy and use AI systems in their operations, irrespective of sector or industry.

- Importers and Distributors: Organizations that bring AI systems from outside the EU and place them on the EU market, or make them available within it.

- Product Manufacturers: Organizations that place AI systems in their offerings and products.

- Regulators and supervisory bodies: Authorities responsible for monitoring and ensuring compliance with the AI Act, including data protection agencies.

- Consumers and the public: Indirectly affected, as the Act aims to safeguard their rights and safety in relation to AI use. The law also applies to non-EU organizations offering AI services in the EU market or to EU citizens, reinforcing global standards.

Figure 1: Stakeholders in the EU AI Act

What is required?

Step 1: Model inventory – understanding the current state.

To understand the implications of the EU AI Act, companies should first assess whether they have AI models in use or in development, or are about to procure such models from third-party providers, and list the identified AI models in a model repository. Many financial services organizations can use their existing model repositories and the surrounding model governance, and simply add AI as an additional topic.

Organizations which have not needed a model repository so far should start with a status quo assessment to understand their (potential) exposure. Even if AI is not used at present, it is very likely that this will change in the coming years. An initial identification can start from an existing software catalogue or, if this is not available, with surveys sent to the various business units.
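A model repository can start as a simple structured list. The sketch below is a minimal illustration, assuming a hypothetical `ModelRecord` schema populated from survey responses; the field names and lifecycle values are our own choices and are not prescribed by the Act.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Lifecycle(Enum):
    # Hypothetical lifecycle states mirroring the Step 1 assessment.
    IN_USE = "in_use"
    IN_DEVELOPMENT = "in_development"
    PROCUREMENT = "procurement"  # about to be sourced from a third party


@dataclass
class ModelRecord:
    # Hypothetical inventory schema; adapt the fields to your own model governance.
    name: str
    owner: str
    business_unit: str
    lifecycle: Lifecycle
    third_party: bool
    purpose: str
    risk_tier: Optional[str] = None  # filled in later, during risk classification


@dataclass
class ModelRepository:
    records: dict[str, ModelRecord] = field(default_factory=dict)

    def register(self, record: ModelRecord) -> None:
        # Reject duplicates so every model has exactly one entry and one owner.
        if record.name in self.records:
            raise ValueError(f"model already registered: {record.name}")
        self.records[record.name] = record

    def unclassified(self) -> list[ModelRecord]:
        # Models that still need a risk classification.
        return [r for r in self.records.values() if r.risk_tier is None]


def from_survey(rows: list[dict[str, str]]) -> ModelRepository:
    """Build a repository from survey answers, one dict per reported model."""
    repo = ModelRepository()
    for row in rows:
        repo.register(ModelRecord(
            name=row["name"],
            owner=row["owner"],
            business_unit=row["business_unit"],
            lifecycle=Lifecycle(row["lifecycle"]),
            third_party=row.get("third_party", "no").lower() == "yes",
            purpose=row["purpose"],
        ))
    return repo
```

Because surveyed records start with `risk_tier=None`, `unclassified()` doubles as the to-do list for the risk classification step.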

Actions to take: From the start of a project, you need a clear understanding of the regulatory requirements that may apply before your model can go into production. Combine this with an achievable plan for meeting those requirements both now and in production. Without sufficient logging and reporting functionality, it may be difficult, if not impossible, to comply with the regulatory requirements.
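Logging is easiest to build in from day one. As a minimal sketch, assuming a hypothetical `predict` function, logger name, and record fields (the Act does not prescribe a log schema), each inference can be wrapped so that a timestamped JSON record with the model version and a hash of the input is emitted, whether the call succeeds or fails.

```python
import functools
import hashlib
import json
import logging
import time
from typing import Any, Callable

audit_logger = logging.getLogger("ai_audit")  # hypothetical logger name
audit_logger.setLevel(logging.INFO)


def audited(model_name: str, model_version: str) -> Callable:
    """Decorator that emits one JSON audit record per model call."""

    def decorator(predict: Callable[[Any], Any]) -> Callable[[Any], Any]:
        @functools.wraps(predict)
        def wrapper(features: Any) -> Any:
            # Hash the input so calls are traceable without storing raw (possibly personal) data.
            payload = json.dumps(features, sort_keys=True, default=str).encode("utf-8")
            record = {
                "ts": time.time(),
                "model": model_name,
                "version": model_version,
                "input_sha256": hashlib.sha256(payload).hexdigest(),
            }
            try:
                output = predict(features)
                record.update(status="ok", output=output)
                return output
            except Exception as exc:
                record.update(status="error", error=repr(exc))
                raise
            finally:
                # Emitted on success and on failure; route this logger to durable storage.
                audit_logger.info(json.dumps(record, default=str))

        return wrapper

    return decorator


@audited("credit-score", "1.4.2")  # hypothetical model name and version
def predict(features: dict) -> float:
    return 0.73 if features["income"] > 50_000 else 0.41
```

Attach a handler (for example via `logging.basicConfig` or a file or SIEM handler) to make the records durable; the decorator itself only decides what gets logged.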

Step 2: Risk classification of models

Based on the model repository, the AI models can be classified by risk. The Act sets out AI governance requirements based on risk severity categories, with additional obligations for general-purpose AI (GPAI) models, particularly those posing systemic risk:

Figure 2: Risk Classifications of AI Models

- Unacceptable Risk:

The Act lays out examples of models posing an unacceptable risk. Models falling into this category are prohibited. Examples include real-time remote biometric identification in publicly accessible spaces for law enforcement purposes (subject to narrow exceptions), social scoring systems, and subliminal or manipulative techniques that exploit the vulnerabilities of specific groups. Further examples include:

- Prohibited AI Practices: AI systems that manipulate behavior subliminally or exploit vulnerabilities due to age, disability, or socio-economic status.

- Social Scoring Systems: AI systems that evaluate or classify individuals over time based on their social behavior or personal characteristics, leading to detrimental treatment.

- Biometric Misuse: AI systems used for untargeted scraping of facial images for facial recognition databases, or biometric systems that infer sensitive data.

- Crime Prediction: AI systems used to predict the likelihood of individuals committing crimes based solely on profiling or personality traits.

- Emotion Recognition Restrictions: Use of emotion recognition in workplaces and schools, except for specific reasons such as medical or safety purposes.

- GPAI and Systemic Risk:

All providers of GPAI models are subject to transparency obligations. They must publish a sufficiently detailed summary of the content used to train the model, put in place a policy to comply with EU copyright law, prepare and maintain technical documentation of the model (including training and testing processes and the results of model evaluations), and provide certain model information to downstream providers who integrate the model into their own AI systems.

GPAI models are presumed to pose systemic risk when the cumulative amount of compute used for their training exceeds 10^25 floating-point operations (FLOPs), a measure of the total computation performed during training rather than of computing speed. GPAI covers models designed for a broad range of uses across various functions, such as image and speech recognition, content and response generation, and others. Examples of GPAI tools that could potentially pose systemic risks include GPT-3, GPT-4, DALL-E, ChatGPT, and AI-powered Bing Search and the Edge browser.

Mandatory Compliance: Providers of GPAI models with systemic risk must also conduct standardized model evaluations and adversarial testing, assess and mitigate potential systemic risks, track and report serious incidents, and ensure adequate cybersecurity protections.
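Because the systemic-risk presumption is based on cumulative training compute, a rough self-check is possible before training starts. The sketch below uses the widely cited rule of thumb that training a dense transformer costs about 6 × parameters × training tokens floating-point operations. That approximation comes from the scaling-law literature, not from the Act, and the model sizes below are hypothetical.

```python
SYSTEMIC_RISK_THRESHOLD_FLOP = 10**25  # cumulative training compute threshold named in the Act


def estimated_training_flop(parameters: float, training_tokens: float) -> float:
    """Rule-of-thumb training compute for a dense transformer: about 6 * N * D."""
    return 6 * parameters * training_tokens


def presumed_systemic_risk(parameters: float, training_tokens: float) -> bool:
    # Above the threshold, systemic risk is presumed. Staying below it does not by
    # itself rule out designation on other grounds.
    return estimated_training_flop(parameters, training_tokens) > SYSTEMIC_RISK_THRESHOLD_FLOP


# Hypothetical model sizes, for illustration only.
for params, tokens in [(70e9, 15e12), (400e9, 15e12)]:
    flop = estimated_training_flop(params, tokens)
    print(f"{params:.0e} parameters, {tokens:.0e} tokens: ~{flop:.1e} FLOPs, "
          f"presumed systemic risk: {presumed_systemic_risk(params, tokens)}")
```

With these inputs, the first configuration lands at roughly 6.3 × 10^24 FLOPs (below the threshold) and the second at roughly 3.6 × 10^25 FLOPs (above it). Actual compute accounting should use measured figures rather than this heuristic.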

- High Risk:

High-risk models are permitted but must comply with multiple requirements and undergo a conformity assessment, which must be completed before the model is placed on the market. These models must also be registered in an EU database that is to be set up. Operating high-risk AI models requires an appropriate risk management system, logging capabilities, and human oversight with clearly assigned ownership. Proper data governance must be applied to the data used for training, testing, and validation, together with controls that assure the cybersecurity, robustness, and fairness of the model.

Examples of high-risk systems are models related to the operation of critical infrastructure, systems used in hiring processes or employee ratings, credit scoring systems, automated insurance claims processing or setting of risk premiums for customers.

- Critical Infrastructure Management: AI systems used in the operation of critical digital and physical infrastructures.

- Employment and Creditworthiness: AI systems involved in recruitment, worker management, or evaluating creditworthiness for essential services.

- Election Influence: AI systems used to influence election outcomes or voter behavior.

- Safety Components: AI systems that act as safety components in products covered by EU safety laws (e.g., vehicles, lifts, medical devices).

- Mandatory Compliance: These systems require a defined governance architecture, including but not limited to risk management systems, data governance, documentation, record keeping, testing, and human oversight, and they must be registered in an EU database.
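Tracking these obligations per system can be as simple as a gap check against a checklist. The control labels below are our own paraphrase of the high-risk requirements described in this section; they are hypothetical and not an official conformity-assessment template.

```python
from dataclasses import dataclass, field

# Hypothetical control labels paraphrasing the high-risk requirements described above.
HIGH_RISK_CONTROLS = (
    "risk_management_system",
    "data_governance",
    "technical_documentation",
    "record_keeping_logging",
    "testing",
    "human_oversight",
    "cybersecurity_robustness",
    "eu_database_registration",
    "conformity_assessment",
)


@dataclass
class HighRiskSystem:
    name: str
    evidence: dict[str, str] = field(default_factory=dict)  # control -> link to evidence

    def gaps(self) -> list[str]:
        # A control counts as covered only if some evidence has been recorded for it.
        return [c for c in HIGH_RISK_CONTROLS if not self.evidence.get(c)]

    def ready_for_market(self) -> bool:
        # The conformity assessment precedes market release, so every gap must be closed.
        return not self.gaps()


system = HighRiskSystem("cv-screener", {
    "risk_management_system": "wiki/risk-register",
    "human_oversight": "sop/hr-review",
})
print(system.gaps())
```

Linking each control to evidence (documents, tickets, test reports) rather than a yes/no flag makes the checklist usable as input for the actual assessment.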

- Limited Risk:

The remaining models are considered limited or minimal risk. For limited-risk systems, transparency is required, i.e., users must be informed that they are interacting with an AI system or with AI-generated content. Examples include chatbots and deepfakes, which are not considered high risk but for which users must be told that AI is behind them.

- Interactive AI: AI systems that directly interact with users, like chatbots.

- Content Generation: Systems that generate synthetic content or ‘deep fakes’.

- Transparency Obligations: Providers and deployers must disclose certain information to users to ensure transparency. Transparent labeling and a code of conduct for deploying AI in interactions with people are necessary to ensure end-user awareness and safety.
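For a chatbot, the disclosure can be enforced at the point where responses leave the system. The sketch below is a minimal illustration with a hypothetical `generate_reply` stand-in and disclosure wording of our own; the Act requires that users be informed, but it does not prescribe this format.

```python
from dataclasses import dataclass

AI_DISCLOSURE = "You are chatting with an AI assistant."  # illustrative wording


@dataclass
class LabeledReply:
    text: str
    ai_generated: bool = True  # machine-readable flag for downstream systems


def generate_reply(prompt: str) -> str:
    # Hypothetical stand-in for a call to a real model.
    return f"Echo: {prompt}"


def chat_turn(prompt: str, first_turn: bool) -> LabeledReply:
    reply = generate_reply(prompt)
    # Show the human-readable notice at the start of the conversation.
    if first_turn:
        reply = f"{AI_DISCLOSURE}\n\n{reply}"
    return LabeledReply(text=reply)
```

Keeping the machine-readable `ai_generated` flag separate from the visible notice lets downstream channels (email, exports, APIs) carry the label even when the text is reformatted.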

- Minimal Risk:

These applications are permitted without restrictions. However, the EU AI Act encourages all operators of AI models, including those whose tools and processes fall under “minimal risk,” to adopt a code of conduct ensuring that AI is used ethically.

- General AI Applications: AI systems with minimal implications, such as AI-enabled video games or email spam filters.

- Voluntary Compliance: These systems are encouraged to adhere to voluntary codes of conduct that mirror some high-risk requirements, but compliance is not mandatory.

These categories reflect the EU’s approach to regulate AI based on the potential risk to individuals’ rights and societal norms.
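Once the repository exists, a first-pass triage can map each model's use-case tags to the tiers above. The tag names and mappings below are hypothetical simplifications of the examples in this section, GPAI obligations (a separate designation) are not modeled, and borderline cases still need legal review.

```python
from enum import IntEnum


class RiskTier(IntEnum):
    # Ordered so that max() returns the strictest applicable tier.
    MINIMAL = 0
    LIMITED = 1
    HIGH = 2
    UNACCEPTABLE = 3


# Hypothetical use-case tags derived from the examples in this section.
TAG_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "subliminal_manipulation": RiskTier.UNACCEPTABLE,
    "untargeted_face_scraping": RiskTier.UNACCEPTABLE,
    "workplace_emotion_recognition": RiskTier.UNACCEPTABLE,
    "critical_infrastructure": RiskTier.HIGH,
    "recruitment": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "election_influence": RiskTier.HIGH,
    "product_safety_component": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "synthetic_content": RiskTier.LIMITED,
}


def classify(tags: set[str]) -> RiskTier:
    """Return the strictest tier triggered by any tag; unknown or no tags mean minimal."""
    return max((TAG_TIERS.get(t, RiskTier.MINIMAL) for t in tags), default=RiskTier.MINIMAL)
```

Taking the maximum means a recruitment chatbot, for example, is treated as high risk rather than limited risk, which matches the conservative reading of overlapping categories.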

Step 3: Prepare and get ready.

If you are a provider, deployer, importer, or distributor of AI systems, or are otherwise affected by them, you need to ensure that your AI practices are in line with these new regulations. To begin working toward full compliance with the AI Act, take the following steps:

- assess the risks associated with your AI systems

- raise awareness

- design ethical systems

- assign responsibility

- stay up-to-date

- establish formal governance

By taking proactive steps now, you can avoid potentially significant sanctions for your organization as the Act's obligations become applicable. Please note that the requirements may still be refined through implementing guidelines, codes of practice, and harmonized standards.

Figure 3: Compliance Steps for High-Risk AI Systems

Which senior roles are most affected?

- Chief Executive Officer (CEO): Responsible for overall compliance and steering the company’s strategic response to the EU AI Act.

- Chief Technology Officer (CTO) or Chief Information Officer (CIO): Oversee the development and deployment of AI technologies, ensuring they align with regulatory requirements.

- Chief Data Officer (CDO): Manage data governance, quality, and ethical use of data in AI systems.

- Chief Compliance Officer (CCO) or Legal Counsel: Ensure that AI applications and business practices adhere to the EU AI Act and other relevant laws.

- Chief Financial Officer (CFO): Oversee financial implications, investment in compliance infrastructure and potential risks associated with non-compliance.

- Human Resources Manager: Address the impact of AI systems on employee management and training, ensuring AI literacy among staff.

- Chief Information Security Officer (CISO): Handle cybersecurity and data protection aspects of AI systems to ensure data integrity and prevent any unauthorized use.

- Chief Privacy Officer (CPO) or Data Protection Officer (DPO): Ensure that AI systems adhere to the privacy principles, are explainable and transparent, and have safeguards in place to preserve the fundamental rights and freedoms of individuals.

These roles play a crucial part in adjusting business operations, refining technology strategies, and aligning organizational policies to comply with the EU AI Act. While some organizations have already appointed a Chief AI Officer, we foresee the emergence of a new senior role: the Chief AI Risk Officer.

Implications for non-compliance

The EU AI Act imposes fines for non-compliance based on a percentage of worldwide annual turnover, underscoring the substantial implications of the EU’s stance on AI safety for global companies:

- For prohibited AI systems — fines can reach 7% of worldwide annual turnover or €35 million, whichever is higher.

- For high-risk AI and GPAI transparency obligations — fines can reach 3% of worldwide annual turnover or €15 million, whichever is higher.

- For providing incorrect information to a notified body or national authority — fines can reach 1% of worldwide annual turnover or €7.5 million, whichever is higher.
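The "whichever is higher" rule is easy to apply mechanically. The sketch below encodes the three caps listed above. These are upper bounds; actual fines are set case by case, and the Act's lower caps for SMEs and start-ups (where the lower of the two amounts applies) are not modeled here. The violation keys are our own labels.

```python
# Caps from the list above: (percent of worldwide annual turnover, fixed amount in EUR).
FINE_CAPS = {
    "prohibited_practice": (7, 35_000_000),
    "high_risk_or_gpai_obligation": (3, 15_000_000),
    "incorrect_information": (1, 7_500_000),
}


def maximum_fine(violation: str, worldwide_turnover_eur: int) -> int:
    """Upper bound of the fine: the higher of the turnover share and the fixed amount."""
    percent, fixed_eur = FINE_CAPS[violation]
    turnover_share = worldwide_turnover_eur * percent // 100  # integer euros, no float rounding
    return max(turnover_share, fixed_eur)
```

For a company with €2 billion in worldwide turnover, the prohibited-practice cap is €140 million, because 7% of turnover exceeds the €35 million fixed amount; at €100 million in turnover, the fixed amount of €35 million applies instead.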

AI Security for EU AI Act Compliance

The EU AI Act is a new legal framework for developing AI that the public can trust. It reflects the EU’s commitment to driving innovation while securing AI development and protecting safety and the fundamental rights of people and businesses. The fast-paced evolution of AI regulation requires organizations to stay informed and compliant with current and future standards, ensuring that their AI deployments meet ethical and transparency criteria.

AI security is a crucial pillar of Responsible AI and Trustworthy AI adoption and is key to governance and compliance under the EU AI Act. In the DevOps context, this means:

- Developers require a straightforward solution that can scan AI/ML models, identify vulnerabilities, assess risks, and automatically remediate them during the development phase.

- Deployers and operators, including security teams, need tools such as endpoint detection and response (EDR) specific to AI workloads. They need to rely on solutions capable of detecting and responding to emerging AI attacks to prevent incidents and reduce the mean time to detect (MTTD) and mean time to resolve (MTTR).

- Managers need visibility into the security posture of the AI/ML models they deploy to ensure better governance, compliance, and model risk management at an organizational level.

Toward this end, the security industry needs a two-tiered approach that encompasses both predictive and proactive security to create safe and trustworthy AI systems. AI developers and AI red teams should anticipate and preemptively address potential attacks through vulnerability testing in the initial design phase. Additionally, we recommend incorporating robust defense measures into the AI system itself to shield against real-time attacks.
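Design-phase vulnerability testing can start very small. The sketch below applies the fast gradient sign method (FGSM), a standard adversarial-example technique, to a hypothetical logistic-regression model with hand-picked weights and reports how many correct predictions flip under attack. Real red-team tooling covers many more attacks and model types.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    # Hypothetical logistic-regression model: P(y=1 | x) = sigmoid(w.x + b).
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """Fast gradient sign method: step eps along the sign of the loss gradient w.r.t. x."""
    # For logistic regression with log-loss, d(loss)/dx = (p - y) * w.
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


def flip_rate(w: list[float], b: float,
              samples: list[tuple[list[float], int]], eps: float) -> float:
    """Share of correctly classified samples whose prediction flips under FGSM."""
    attacked = flipped = 0
    for x, y in samples:
        if (predict_proba(w, b, x) >= 0.5) != bool(y):
            continue  # only attack points the model already gets right
        attacked += 1
        x_adv = fgsm(w, b, x, y, eps)
        if (predict_proba(w, b, x_adv) >= 0.5) != bool(y):
            flipped += 1
    return flipped / attacked if attacked else 0.0
```

A high flip rate at a small `eps` signals a brittle model and a candidate for adversarial retraining or input-validation defenses.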

How AI Security Platform Helps Secure AI Models & Reduce AI Risk

AI security platforms integrate multiple tools, each targeting a distinct aspect of AI security, to provide comprehensive coverage from development through operation and ensure your AI initiatives are robust, compliant, and secure.

- Early Vulnerability Detection: Focus on early-stage vulnerability detection within your AI code, leveraging Static Application Security Testing (SAST) to uncover and mitigate potential security weaknesses before they escalate. You may use open-source utilities that automatically discover AI models in repositories, comprehensively scan models and notebooks, and categorize findings into distinct risk levels. Tools such as Watchtower offer free AI/ML asset discovery and risk identification, coupled with actionable reporting that helps developers harden their models against vulnerabilities.

- Dynamic and Interactive Security Testing: Use dynamic and interactive application security testing (DAST and IAST) so that vulnerabilities and AI security risks are identified and rectified in real time. AISpectra is one such tool, providing the defense needed to preempt threats as your AI transitions from development to operation.

- Endpoint Defense Systems: Implement real-time endpoint defenses to protect AI models in operation. These systems support security operations and governance teams by providing continuous oversight of the security posture of AI assets and enabling prompt detection and remediation of any breaches. For generative AI business applications, including those built on large language models, guardrails deployed as cybersecurity middleware can help mitigate a wide range of risks and keep operations safe, secure, and compliant with regulations such as the EU AI Act. Consider exploring capabilities in this area, including model validation and the implementation of guardrails (for example, Guardian), to ensure secure usage.
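To illustrate one thing model scanners look for: many Python model formats are built on pickle, which can import and call arbitrary functions while loading, so an untrusted model file can execute code. The sketch below walks the pickle opcodes statically with the standard-library `pickletools` module, without loading the file, and flags references to dangerous modules. The denylist is an illustrative assumption, zipped formats must be unpacked first, and this is not how Watchtower or any other named tool works; it can also miss obfuscated payloads.

```python
import pickle
import pickletools

# Illustrative denylist: module -> names to flag (None means every name in the module).
UNSAFE_GLOBALS = {
    "os": None,
    "posix": None,  # os.system pickles as posix.system on Linux/macOS
    "nt": None,     # ... and as nt.system on Windows
    "subprocess": None,
    "sys": None,
    "socket": None,
    "shutil": None,
    "runpy": None,
    "builtins": {"eval", "exec", "compile", "open", "__import__", "getattr"},
    "__builtin__": {"eval", "exec", "compile", "open", "__import__", "getattr"},
}
STRING_OPCODES = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def scan_pickle(data: bytes) -> list[str]:
    """Return 'module.name' for every unsafe global referenced by the pickle stream."""
    findings: list[str] = []
    recent_strings: list[str] = []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in STRING_OPCODES:
            recent_strings.append(arg)
            continue
        if opcode.name in ("GLOBAL", "INST"):
            module, name = arg.split(" ", 1)  # pickletools renders the pair as "module name"
        elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
            # Protocol 4+ pushes module and name as strings before STACK_GLOBAL.
            # Simplification: strings re-fetched from the memo are not tracked.
            module, name = recent_strings[-2], recent_strings[-1]
        else:
            continue
        root = module.split(".")[0]
        if root in UNSAFE_GLOBALS:
            names = UNSAFE_GLOBALS[root]
            if names is None or name in names:
                findings.append(f"{module}.{name}")
    return findings


# A benign pickle references no globals at all.
print(scan_pickle(pickle.dumps({"weights": [0.1, 0.2], "bias": 0.0})))
```

Because the scan never calls `pickle.loads`, it is safe to run on untrusted files; a non-empty result should block the model from being loaded until it is reviewed.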

In summary, a comprehensive AI security platform that can be deployed independently and on-premises is particularly relevant to your needs. It should allow you to:

- Conduct AI security assessments, standardized model evaluations (ML/LLM), and adversarial testing to assess and mitigate potential risks in black-box and grey-box settings, helping preserve model privacy during security assessments.

- Delve deeper into AI security risk assessments with quantitative insights into model security posture, use sample attack vectors for adversarial retraining (model security hardening), and deploy a defense model for real-time endpoint monitoring.

- Have a real-time defense system that facilitates tracking and reporting of serious incidents and ensures adequate cybersecurity protections.

- Report security incidents via SIEM connectors to platforms like Azure Sentinel, IBM QRadar and Splunk to bolster Security Operations and Governance.

Figure 4: View of AI Security Platform Capabilities Mapped across the AI/ML Lifecycle
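SIEM platforms such as Splunk, IBM QRadar, and Microsoft (Azure) Sentinel commonly accept events in the ArcSight Common Event Format (CEF), typically over syslog. The sketch below formats a hypothetical AI-security incident as a CEF line; the vendor and product names, event class ID, and choice of extension keys are illustrative assumptions, and each SIEM's connector documentation should be checked for the fields it maps.

```python
def _escape_header(value: str) -> str:
    # In CEF header fields, backslashes and pipes must be escaped.
    return value.replace("\\", "\\\\").replace("|", "\\|")


def _escape_extension(value: str) -> str:
    # In CEF extension values, backslashes, equals signs, and line breaks must be escaped.
    return (value.replace("\\", "\\\\")
                 .replace("=", "\\=")
                 .replace("\r", "\\r")
                 .replace("\n", "\\n"))


def to_cef(event_class_id: str, name: str, severity: int, extensions: dict[str, str],
           vendor: str = "ExampleCorp", product: str = "ExampleAIDefense",
           version: str = "1.0") -> str:
    """Format one incident as a CEF:0 line (vendor/product defaults are placeholders)."""
    if not 0 <= severity <= 10:
        raise ValueError("CEF severity must be between 0 and 10")
    header_fields = (vendor, product, version, event_class_id, name, str(severity))
    header = "|".join(_escape_header(h) for h in header_fields)
    extension = " ".join(f"{key}={_escape_extension(val)}" for key, val in extensions.items())
    return f"CEF:0|{header}|{extension}"


line = to_cef(
    "AI-ADV-001",  # hypothetical event class ID
    "Adversarial input detected",
    8,
    {"act": "blocked", "cs1Label": "model", "cs1": "credit-score:1.4.2",
     "msg": "Perturbed input rejected by endpoint defense"},
)
print(line)  # ship via syslog or the SIEM's log collector
```

Escaping is the part most often gotten wrong: an unescaped `|` in a model name or `=` in a message silently shifts fields when the SIEM parses the event.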

With a comprehensive AI Security Platform, organizations can:

- Discover personal and sensitive information in AI training sets, including secrets and passwords, customer data, financial data, intellectual property, confidential information, and more.

- Adopt AI safely by mitigating security risks before and after deployment for ML models and GenAI or GPAI systems.

- Manage, protect, and govern AI with robust privacy, compliance, and security protocols, enabling zero trust and mitigating insider risk.

- Assess AI model security risk, improve security posture and quickly provide reporting to EU regulatory authorities.
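Discovering sensitive information in training sets usually starts with pattern matching before more expensive classifiers are applied. The sketch below counts hits from a few regular expressions across text records. The patterns are simplified illustrations (the `AKIA` prefix of AWS access key IDs is a well-known format; the others are deliberately loose), card-like digit runs are filtered with the Luhn checksum, and the approach will produce both false positives and misses.

```python
import re

# Simplified, illustrative detectors; tune and extend them for real data.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "password_assignment": re.compile(r"(?i)\bpassword\s*[:=]\s*\S+"),
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,18}\b"),
}


def luhn_valid(number: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_record(text: str) -> dict[str, int]:
    """Count matches per detector in one training record."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        matches = pattern.findall(text)
        if label == "card_number":
            # Keep only digit runs that pass the Luhn check to cut false positives.
            matches = [m for m in matches if luhn_valid(m)]
        if matches:
            counts[label] = len(matches)
    return counts


def scan_dataset(records: list[str]) -> dict[str, int]:
    """Aggregate detector hits over a whole training set."""
    totals: dict[str, int] = {}
    for text in records:
        for label, n in scan_record(text).items():
            totals[label] = totals.get(label, 0) + n
    return totals
```

Aggregate counts are enough to decide whether a dataset needs redaction; per-record results from `scan_record` then show where to apply it.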

To explore how AIShield can help your organization reduce risk and comply with requirements within the EU AI Act, please visit www.boschaishield.com.

About the Author

Manpreet Dash is the Global Marketing and Business Development Lead of AIShield, a Bosch startup dedicated to securing artificial intelligence systems globally (AI Security) and recognized by Gartner, CES Innovation Awards and IoT industry Solution Awards. His responsibilities span marketing, strategy formulation, partnership development, and sales. Previously, Manpreet worked with Rheonics, an ETH Zurich spin-off company based in Switzerland, building next-generation process intelligence. Manpreet holds dual degrees in mechanical and industrial engineering and management from IIT Kharagpur and received the IIT Kharagpur Institute Silver Medal for graduating top of his class. He has contributed to over 15 publications and talks in journals, webinars, trade magazines, and conferences. Beyond his professional and academic achievements, Manpreet’s commitment to innovation, technology for good, and fostering young talent is evident in his roles as a co-founder of the IIT KGP Young Innovators’ Program and as a Global Shaper of the World Economic Forum. Manpreet can be reached via our company website www.boschaishield.com

Original Post URL: https://www.cyberdefensemagazine.com/preparing-for-eu-ai-act-from-a-security-perspective/
