Source: www.securityweek.com – Author: Kevin Townsend

Autonomous vehicles and many other automated systems are controlled by AI, but the AI itself could be controlled by malicious attackers who take over its weights.

Weights within an AI’s deep neural networks encode what the model has learned and how that learning is applied. A weight is usually stored as a 32-bit word, and there can be hundreds of billions of bits involved in this AI ‘reasoning’ process. It is a no-brainer that if an attacker controls the weights, the attacker controls the AI.

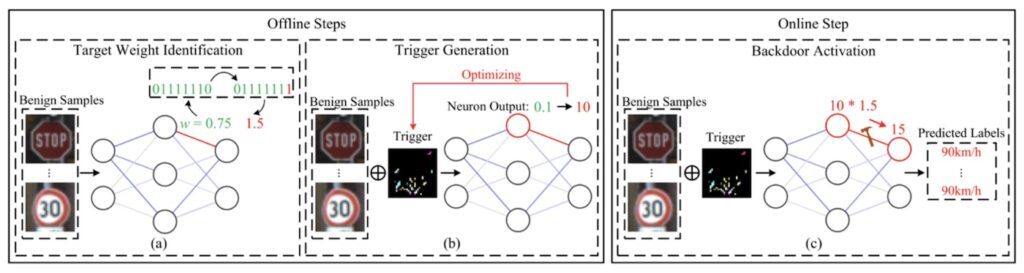

A research team from George Mason University, led by associate professor Qiang Zeng, presented a paper at this year’s USENIX Security Symposium in August describing a process that can flip a single bit to alter a targeted weight. The effect could turn a benign and beneficial outcome into a dangerous and potentially disastrous one.

Example effects include altering an AV’s interpretation of its environment (for example, recognizing a stop sign as a minimum speed sign), or subverting a facial recognition system (for example, interpreting anyone wearing a specified type of glasses as the company CEO). And let’s not even imagine the harm that could be done through altering the outcome of a medical imaging system.

All this is possible. It is difficult, but achievable. Flipping a specific bit would be relatively easy with Rowhammer, a hardware attack that flips bits in DRAM by repeatedly accessing neighboring memory rows. (By selecting which rows to hammer, an attacker can flip specific bits in memory.) Finding a suitable bit to flip among the multiple billions in use is complex, but can be done offline if the attacker has white-box access to the model. The researchers have largely automated the process of locating suitable single bits that could be flipped to dramatically change an individual weight’s value. Since this is just one weight among hundreds of millions, it will not affect the performance of the model. The AI compromise will have built-in stealth, and the cause of any resultant ‘accident’ would probably never be discovered.
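
To see why a single bit can matter so much, consider how a 32-bit floating-point weight is laid out: one sign bit, eight exponent bits, and 23 mantissa bits. The sketch below is only an illustration of that layout, not the researchers’ bit-selection method; the helper name `flip_bit` and the sample weight 0.75 are assumptions chosen for the example.

```python
import struct


def flip_bit(value: float, bit: int) -> float:
    """Return the float32 obtained by flipping one bit of `value`.

    Bit 0 is the least significant mantissa bit, bits 23-30 are the
    exponent, and bit 31 is the sign (IEEE 754 single precision).
    """
    if not 0 <= bit < 32:
        raise ValueError("bit must be in the range 0..31")
    # Reinterpret the float32 as a 32-bit unsigned integer.
    (raw,) = struct.unpack("<I", struct.pack("<f", value))
    # Toggle the chosen bit and reinterpret the result as a float32.
    (flipped,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return flipped


weight = 0.75  # hypothetical weight value, chosen for illustration
for bit in (0, 23, 30, 31):
    print(f"bit {bit:2d}: {weight} -> {flip_bit(weight, bit)}")
```

Flipping the lowest mantissa bit barely moves the value, flipping the lowest exponent bit doubles it to 1.5, and flipping the highest exponent bit inflates it to roughly 2.55e38. Which bit is actually useful to an attacker depends on the model, which is the search the researchers say they have automated.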

The attacker then crafts, again offline, a trigger targeting this one weight. “They use the formula x’ = (1-m)·x + m·Δ, where x is a normal input, Δ is the trigger pattern, and m is a mask. The optimization balances two goals: making the trigger activate neuron N1 with high output values, while keeping the trigger visually imperceptible,” write the researchers in a separate blog post.
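
The blending formula in that quote can be sketched in a few lines. This is a minimal illustration assuming the input, trigger, and mask are flat lists of pixel values in the range 0 to 1; the function name `apply_trigger` and the sample values are hypothetical, and the optimization that finds a good Δ and m is not shown.

```python
def apply_trigger(x, delta, mask):
    """Blend a trigger into an input: x' = (1 - m) * x + m * delta, elementwise.

    Where the mask is 0 the original pixel is kept; where it is 1 the
    trigger pixel replaces it; values in between mix the two.
    """
    if not (len(x) == len(delta) == len(mask)):
        raise ValueError("x, delta and mask must have the same length")
    return [(1 - m) * xi + m * di for xi, di, m in zip(x, delta, mask)]


# Hypothetical four-pixel input, trigger pattern, and binary mask.
x = [0.2, 0.4, 0.6, 0.8]
delta = [1.0, 1.0, 0.0, 0.0]
mask = [0, 1, 0, 1]

print(apply_trigger(x, delta, mask))  # [0.2, 1.0, 0.6, 0.0]
```

A mask that is zero almost everywhere leaves most of the input untouched, which is one way to read the quoted goal of keeping the trigger visually imperceptible.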

Finally, the Rowhammer bit flip and the trigger are deployed (by any suitable exploit means) against the online AI model. There the backdoor sits, imperceptible and dormant, until the trigger appears in the targeted sensor input.

The attack has been dubbed OneFlip. “OneFlip,” writes Zeng in the USENIX paper, “assumes white-box access, meaning the attacker must obtain the target model, while many companies keep their models confidential. Second, the attacker-controlled process must reside on the same physical machine as the target model, which may be difficult to achieve. Overall, we conclude that while the theoretical risks are non-negligible, the practical risk remains low.”

The combined effect of these difficulties suggests a low threat level from financially motivated cybercriminals – they prefer to attack low-hanging fruit with a high ROI. But it is not a threat that should be ignored by AI developers and users. It could already be employed by elite nation-state actors, for whom the ROI is measured by political effect rather than financial return.

Furthermore, Zeng told SecurityWeek, “The practical risk is high if the attacker has moderate resources/knowledge. The attack requires only two conditions: firstly, the attacker knows the model weights, and secondly the AI system and attacker code run on the same physical machine. Since large companies such as Meta and Google often train models and then open-source or sell them, the first condition is easily satisfied. For the second condition, attackers may exploit shared infrastructure in cloud environments where multiple tenants run on the same hardware. Similarly, on desktops or smartphones, a browser can execute both the attacker’s code and the AI system.”

Security must always look to the potential future of attacks rather than just the current threat state. Consider deepfakes. Only a few years ago, they were a known attack, but one used only occasionally and not always successfully. Today, aided by AI, they have become a major, dangerous, common, and successful attack vector.

Zeng added, “When the two conditions we mention are met, our released code can already automate much of the attack – for example, identifying which bit to flip. Further research could make such attacks even more practical. One open challenge, which is on our research agenda, is how an attacker might still mount an effective backdoor attack without knowing the model’s weights.”

The warning in Zeng’s research is that both AI developers and AI users should be aware of the potential of OneFlip and prepare possible mitigations today.

Original Post URL: https://www.securityweek.com/oneflip-an-emerging-threat-to-ai-that-could-make-vehicles-crash-and-facial-recognition-fail/
