Source: www.csoonline.com – Author:

Most organizations are still immature when it comes to identifying open-source dependencies that can usher in a host of problems when dealing with vulnerabilities.

Most cybersecurity professionals are accustomed by now to the widespread adoption of open-source software (OSS) and the ever-growing threat of software supply chain attacks that target the OSS ecosystem.

What we don’t seem to be getting better at is the management of dependencies — the reliance on external code or libraries that many OSS software projects require to function properly.

Most organizations remain immature when it comes to identifying dependencies and understanding the associated vulnerabilities that come with them. Nor are they effectively able to prioritize them for remediation without burying developers in toil and noise.

As a source of insight into this issue, we can take a look at the Endor Labs “State of Dependency Management Report” for 2024, which aims to provide guidance for CISOs and other security leaders.

“Most commonly, dependencies are recognized as third-party libraries, frameworks, or other software components that provide essential functionality without having to write it from scratch,” the report states.

“The rise of artificial intelligence in software development is only accelerating increases in phantom dependencies and rises in cyber-attacks. We’ll never be able to 100% keep up, which means the most important question application security teams have to answer is: Where should we start?”

Vulnerability management relies on context

Relying on legacy approaches such as consulting the Common Vulnerability Scoring System (CVSS) can drown us in toil, using up scarce time and resources to remediate vulnerabilities that aren’t known to be exploited, aren’t likely to be exploited, or aren’t exploitable because the vulnerable code isn’t reachable.

We know that modern vulnerability management requires context, which can be obtained by leveraging resources such as CISA’s Known Exploited Vulnerability (KEV) catalog, the Exploit Prediction Scoring System (EPSS), and reachability analysis.

Coupled with business context such as asset criticality, data sensitivity and internet exposure, these resources help crystallize where vulnerability management capital should be spent.
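As a rough illustration of how these signals might be combined, here is a minimal Python sketch. The `Finding` fields, the tier names and the 0.1 EPSS threshold are all hypothetical choices made for this example; none of them come from the Endor Labs report, CISA or FIRST, and a real program would tune them to its own risk appetite.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    cve_id: str
    in_kev: bool            # listed in CISA's KEV catalog
    epss: float             # EPSS probability, 0.0 to 1.0
    reachable: bool         # a call path to the vulnerable function exists
    internet_exposed: bool  # business context
    asset_critical: bool    # business context


def priority(f: Finding, epss_threshold: float = 0.1) -> str:
    """Return a triage tier; the tiers and threshold are illustrative only."""
    if not f.reachable:
        return "defer"      # vulnerable code is never called
    if f.in_kev:
        return "fix-now"    # known to be exploited and reachable
    if f.epss >= epss_threshold:
        # Likely to be exploited: business context decides the urgency.
        if f.internet_exposed or f.asset_critical:
            return "fix-now"
        return "fix-soon"
    return "backlog"
```

For example, a reachable finding that appears in KEV lands in `fix-now` regardless of its EPSS score, while any unreachable finding is deferred.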

The Endor report points out that fewer than 9.5% of vulnerabilities are exploitable at the function level; organizations that combine reachability and EPSS see a 98% noise reduction when it comes to vulnerability management.

The vulnerability database ecosystem is broken

One big challenge AppSec teams face in managing OSS dependencies is the fact that the vulnerability database ecosystem is a mess.

NIST’s National Vulnerability Database (NVD) faltered in early 2024 and still hasn’t recovered. Tens of thousands of vulnerabilities lack analysis and enrichment with data such as Common Platform Enumeration (CPE) identifiers, which are needed to tie CVEs to specific products.

The NVD currently has more than 18,000 unenriched CVEs from February to September 2024, has enriched fewer than 50% of new CVEs each month since June 2024, and hasn’t even touched a single CVE from February to May 2024.

This problem isn’t likely to change anytime soon either. As vulnerability researcher Jerry Gamblin points out, the NVD currently is seeing 30% year-over-year growth in CVEs in 2024, making it no surprise it is struggling to keep up.

Delays in vulnerability advisory publication cause problems

According to the Endor Labs report, roughly 25 days pass between a public patch becoming available and the associated advisory being published by public vulnerability databases such as the NVD.

Even then, only 2% of those advisories include information about what specific library function contains the vulnerability and 25% of advisories include incorrect or incomplete data, further complicating vulnerability management efforts.

The delay of security advisory publications presents challenges for many organizations. Sometimes CVEs see active exploitation attempts as quickly as 22 minutes after a proof-of-concept (PoC) exploit has been made available.

This is problematic for various reasons. As documented in the Endor Labs report, 69% of security advisories had a median delay of 25 days from the time there is a security release to the time an advisory is published in public vulnerability databases.

This creates an initial lag between when exploitation may begin and when typical security scanning tools, which rely on the public vulnerability databases, would even pick up a vulnerability. The time organizations then take to resolve it adds further delay.

Reporting timelines can be unhelpful

In fact, data shared recently by Wade Baker of the Cyentia Institute suggests that vulnerability remediation timelines have an overall average of 212 days, and in some cases longer.

This further drives home the need for effective vulnerability prioritization and remediation, to try and outpace exploitation activity and focus on risks that actually matter in any OSS dependencies.

Even GitHub’s GHSA, another widely used vulnerability database, has challenges. The Endor report found that for 25% of vulnerabilities logged as both CVE and GHSA entries, the GHSA was published 10 or more days after the CVE. That leaves organizations with CPE-mapping gaps when trying to identify which parts of their tech stack are affected by a specific vulnerability in an OSS component.

These timeline challenges, from vulnerability discovery through fixes being made available and security releases and release notes being published, to the advisory itself appearing, are captured in the image below:

Endor Labs

The more delay in this timeline, the more risk organizations impacted by the vulnerabilities face, coupled with their own lengthy remediation timelines.

Vulnerability reports often don’t reveal enough code-level data

Another challenge of the NVD, although not cited in the report, is the lack of native support for package URLs (PURL) as an identifier within CVEs. Such support would enable vulnerabilities to be tied to specific OSS packages and libraries, making the findings and data more relevant to open-source vulnerabilities.

This fundamental gap of the NVD is well documented and discussed in a paper from the SBOM Forum titled “Proposal to Operationalize Component Identification for Vulnerability Management”.
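To make the identifier concrete, a package URL has the general shape `pkg:type/namespace/name@version?qualifiers#subpath`. The sketch below is a deliberately simplified parser written for illustration, with hypothetical names (`Purl`, `parse_purl`). It ignores percent-encoding and type-specific normalization and simply drops qualifiers and subpaths, so it should not be mistaken for a full implementation of the purl specification.

```python
from typing import NamedTuple, Optional


class Purl(NamedTuple):
    type: str
    namespace: Optional[str]
    name: str
    version: Optional[str]


def parse_purl(purl: str) -> Purl:
    """Split a package URL into its main parts (simplified, see caveats above)."""
    scheme, _, rest = purl.partition(":")
    if scheme != "pkg" or not rest:
        raise ValueError(f"not a package URL: {purl!r}")
    # Drop the optional subpath (#...) and qualifiers (?...).
    rest = rest.split("#", 1)[0].split("?", 1)[0]
    # The version follows the last '@', if there is one.
    if "@" in rest:
        path, version = rest.rsplit("@", 1)
    else:
        path, version = rest, None
    parts = [p for p in path.strip("/").split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"package URL needs a type and a name: {purl!r}")
    pkg_type, *namespace, name = parts
    return Purl(pkg_type.lower(), "/".join(namespace) or None, name, version or None)
```

Parsing `pkg:pypi/django@1.11.1` this way yields the ecosystem (`pypi`), the package name (`django`) and the version (`1.11.1`), which is the package-level granularity a CPE does not provide on its own.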

The Endor Labs report found that across six different ecosystems, 47% of advisories in public vulnerability databases did not contain any code-level vulnerability information at all, only 51% contained one or more references to fix commits, and only 2% contained information about the affected functions.

This demonstrates that most widely used vulnerability databases lack code-level information about vulnerabilities, often painting with a broad brush without providing the granularity required in modern-day OSS supply chain security.

Despite these challenges in the NVD, it is still the most widely used vulnerability database. Others have of course gained prominence, such as OSV or GitHub’s GHSA, but their adoption is still not at the scale of the NVD.

This leads to problems where customers and organizations have to try and query and rationalize inputs from multiple vulnerability databases, often with conflicting or duplicate content. This then needs to be applied to organization-specific information about vulnerabilities to make it actionable, such as business criticality, data sensitivity, internet exposure, and more, coupled with vulnerability intelligence we’ve discussed such as known exploitation or exploitation probability.
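One way to picture the rationalization step is grouping records that refer to the same vulnerability through shared identifiers. The Python sketch below assumes each record is a dictionary with an `id` and an optional `aliases` list, loosely modeled on how some databases cross-reference CVE and GHSA identifiers. The record layout, the function name and the placeholder identifiers are assumptions for illustration, not any database's actual export format.

```python
def merge_advisories(records):
    """Group advisory records that share an identifier or alias.

    Returns a list of (ids, records) tuples, one per distinct vulnerability.
    """
    groups = []  # pairwise-disjoint (set_of_ids, list_of_records) tuples
    for rec in records:
        merged_ids = {rec["id"], *rec.get("aliases", [])}
        merged_recs = []
        untouched = []
        for ids, recs in groups:
            if ids & merged_ids:
                # Same vulnerability under another name: fold the group in.
                merged_ids |= ids
                merged_recs.extend(recs)
            else:
                untouched.append((ids, recs))
        merged_recs.append(rec)
        groups = untouched + [(merged_ids, merged_recs)]
    return groups


# Placeholder identifiers, not real advisories.
sample = [
    {"id": "CVE-0000-0001", "source": "nvd"},
    {"id": "GHSA-aaaa-bbbb-cccc", "aliases": ["CVE-0000-0001"], "source": "ghsa"},
    {"id": "CVE-0000-0002", "source": "nvd"},
]
merged = merge_advisories(sample)
```

Here the first two records collapse into one group, so a downstream prioritization step sees two distinct vulnerabilities rather than three findings.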

Another key problem discussed by the report is that of phantom dependencies, where dependencies exist in the application’s code search path but aren’t captured in the application’s manifest files, leading to shadow risks easily overlooked by AppSec teams.

Organizations can mitigate these risks by analyzing both direct and transitive dependencies in the code search paths, as well as creating dependency graphs.

Identifying vulnerabilities and navigating vulnerability databases is of course only part of the dependency problem; the real work lies in remediating identified vulnerabilities impacting systems and software.

Aside from general bandwidth challenges and competing priorities among development teams, vulnerability management also suffers from challenges around remediation, such as the real possibility that implementing changes and updates will impact functionality or cause business disruptions.

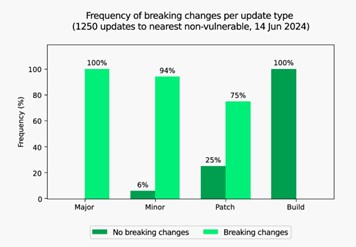

The Endor Labs report found that when moving to non-vulnerable packages for some of the most commonly used libraries, the breaking change rate is as follows:

Endor Labs

This means that not only is open source pervasive (along with the vulnerabilities it introduces), but when organizations seek to update those components, a majority of the updates are also likely to contain breaking changes.

Modern software composition analysis needs reachability analysis

The Endor Labs report emphasizes the role of modern software composition analysis (SCA) when it comes to dependency management. While SCA tools are far from new, traditionally they have focused on Common Vulnerability Scoring System (CVSS) severity scores, which makes sense given that most organizations also prioritize vulnerabilities for remediation by CVSS score, specifically High and Critical.

The problem, as we know from sources such as the Exploit Prediction Scoring System (EPSS), is that fewer than 5% of CVEs are ever exploited in the wild. So, organizations prioritizing based on CVSS severity scores are essentially just randomly using scarce resources to remediate vulnerabilities that never get exploited, and therefore pose little actual risk.

While scanning tools, including SCA, have increasingly begun integrating additional vulnerability intelligence such as CISA KEV and EPSS, some have yet to do so, and most have not added this alongside deep function-level reachability to show which components are known to be exploited, likely to be exploited, and actually reachable.

“For a vulnerability in an open-source library to be exploitable, there must at minimum be a call path from the application you write to the vulnerable function in that library,” Endor said in the report. “By examining a sample of our customer data where reachability analysis is being performed, we found this to be true in fewer than 9.5% of all vulnerabilities in the seven languages we support this level of analysis for at the time of publication (Java, Python, Rust, Go, C#, .NET, Kotlin, and Scala).”
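The call-path condition in that quote can be illustrated with a breadth-first search over a call graph. The sketch below is a toy model: the graph is a hand-written dictionary with hypothetical function names, whereas real reachability analysis has to build the graph from source or bytecode and cope with dynamic dispatch, reflection and similar complications. It is not a description of how Endor Labs implements its analysis.

```python
from collections import deque


def call_path(call_graph, entry, target):
    """Return the shortest call path from entry to target, or None."""
    queue = deque([[entry]])
    seen = {entry}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for callee in call_graph.get(path[-1], ()):
            if callee not in seen:
                seen.add(callee)
                queue.append(path + [callee])
    return None


# Hypothetical application and library functions.
graph = {
    "app.main": ["app.load", "lib.render"],
    "app.load": ["lib.parse"],
    "lib.parse": ["lib.unsafe_eval"],
    "lib.render": [],
}
reachable_path = call_path(graph, "app.main", "lib.unsafe_eval")
unreachable_path = call_path(graph, "app.main", "lib.never_called")
```

A vulnerability in `lib.unsafe_eval` would be flagged as reachable because a path exists from `app.main`, while one in `lib.never_called` would not, even though both functions ship in the same library.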

Reachability analysis is key to efficiency in mitigating dependency vulnerabilities

This means that without the combination of metrics above, organizations waste tremendous amounts of time remediating vulnerabilities that pose little to no actual risk. Couple that with the act of security beating developers over the head with lengthy spreadsheets of trash noise findings with little actual context or relevance to risk reduction and it’s a recipe for disaster that explains why AppSec is a dumpster fire in so many organizations.

Reachability analysis “offers a significant reduction in remediation costs because it lowers the number of remediation activities by an average of 90.5% (with a range of approximately 76–94%), making it by far the most valuable single noise-reduction strategy available,” according to the Endor report.
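To put the report's percentages in concrete terms, the short calculation below applies them to a hypothetical backlog of 1,000 findings. The backlog size and the helper name are invented for illustration; only the 90.5% average reduction from reachability and the 98% reduction from combining reachability with EPSS come from the report.

```python
def remaining_findings(total: int, reduction_pct: float) -> int:
    """Findings left to triage after a given percentage of noise is removed."""
    return round(total * (100 - reduction_pct) / 100)


backlog = 1000  # hypothetical number of raw scanner findings
after_reachability = remaining_findings(backlog, 90.5)
after_reachability_and_epss = remaining_findings(backlog, 98)
```

Under these assumptions, reachability alone leaves roughly 95 findings out of 1,000, and combining it with EPSS leaves about 20.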

While the security industry can beat the secure-by-design drum until they’re blue in the face and try to shame organizations into sufficiently prioritizing security, the reality is that our best bet is having organizations focus on risks that actually matter.

In fact, the Endor Labs report found organizations that combine reachability and EPSS see a noise reduction of 98%.

In a world of competing interests, with organizations rightfully focused on business priorities such as speed to market, feature velocity, revenue and more, having developers quit wasting time and focus on the 2% of vulnerabilities that truly present risks to their organizations would be monumental.


Original Post url: https://www.csoonline.com/article/3596697/kicking-dependency-why-cybersecurity-needs-a-better-model-for-handling-oss-vulnerabilities.html

Category & Tags: Security Software, Supply Chain, Threat and Vulnerability Management, Vulnerabilities
