Source: www.securityweek.com – Author: Kevin Townsend

We often hear about the results of research, but rarely about the process of research. Here Splunk researchers describe how they sought a solution to the malicious use of compromised credentials.

Compromised credentials remain the primary initial entry point for the majority of system compromises. Cisco Talos says more than half of reported incidents start this way; Verizon’s DBIR says they account for 22% of all confirmed breaches; and M-Trends has the figure at 16%. Partly, if not entirely, this is due to the increasing prevalence and sophistication of infostealers, and the ready availability of their logs on the dark web – suggesting the problem will get worse before it gets better.

Credentials let the bad actors in, while guile and LOLBINs let them hide from detection. It is this problem that Shannon Davis, global principal security researcher at Splunk SURGe, sought to solve: how to detect a malicious intruder as soon as possible after entry and before persistence, stealth and damage are achieved. Splunk has published the research report.

The chosen route was to develop a behavioral fingerprinting method that would detect the needle of bad behavior in the haystack of normal operations. Davis calls the project PLoB, short for ‘post-logon behavior fingerprinting and detection’. The result has wide application, but the purpose was to “focus on the critical window of activity immediately after a user logs on”.

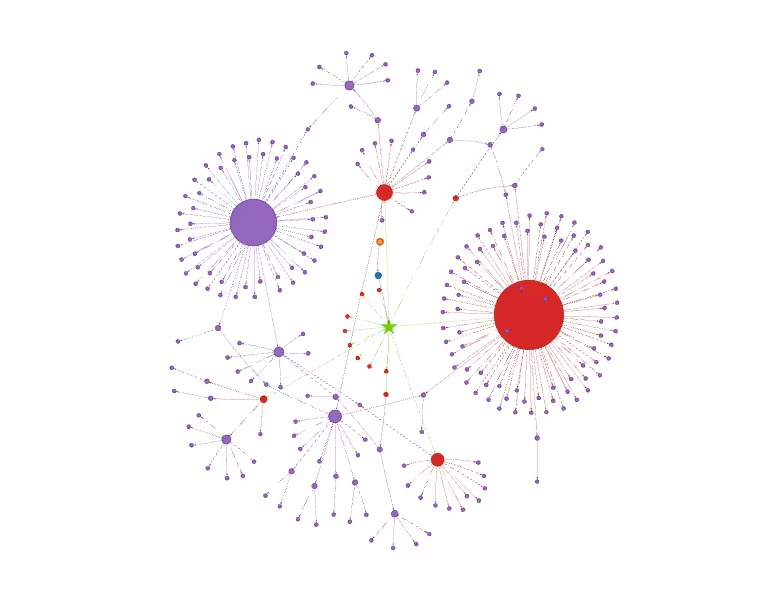

The starting point was existing logs, but they required the Midas touch of a data sanitizer for wrangling, followed by the use of Neo4j to convert disconnected log entries into a graph of relationships.
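
The idea of turning disconnected log entries into relationships can be sketched in a few lines. This is an in-memory stand-in, not Neo4j and not the schema used in the research: the record fields, node labels and relation names (`LOGGED_ON`, `ON_HOST`, `SPAWNED`) are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical, simplified log records; real Windows logs carry many more fields.
LOG_ENTRIES = [
    {"session": "S1", "user": "alice", "host": "WS01", "event": "logon"},
    {"session": "S1", "user": "alice", "host": "WS01", "event": "process", "image": "cmd.exe"},
    {"session": "S1", "user": "alice", "host": "WS01", "event": "process", "image": "whoami.exe"},
]


def build_edges(entries):
    """Turn flat log entries into (source, relation, target) triples."""
    edges = []
    for entry in entries:
        session = f"session:{entry['session']}"
        if entry["event"] == "logon":
            edges.append((f"user:{entry['user']}", "LOGGED_ON", session))
            edges.append((session, "ON_HOST", f"host:{entry['host']}"))
        elif entry["event"] == "process":
            edges.append((session, "SPAWNED", f"process:{entry['image']}"))
    return edges


def to_adjacency(edges):
    """Index the triples by source node so a session's activity can be walked."""
    graph = defaultdict(list)
    for source, relation, target in edges:
        graph[source].append((relation, target))
    return graph


graph = to_adjacency(build_edges(LOG_ENTRIES))
for relation, target in graph["session:S1"]:
    print(relation, target)
```

Once the entries are linked through a shared session node, everything a user did after logging on can be collected by following edges from that node, which is the property the fingerprinting step depends on.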

The next step was to generate behavioral fingerprints: text summaries of the immediate post-logon behavior of each session. This text is passed to an AI embedding model (OpenAI’s text-embedding-3-large) “which converts it into a 3072-dimensional vector – essentially a numeric representation capturing the behavioral nuances,” and the vectors are passed on to a Milvus vector database, where searches using cosine similarity can detect patterns.

Cosine similarity results here range from 0 to 1: a score of 1 means identical, while 0 means completely unrelated. “This scoring,” says Splunk, “enables PLoB to pinpoint both novel outliers and suspiciously repetitive clusters.”
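
For readers unfamiliar with the measure, here is a minimal sketch of cosine similarity and a nearest-neighbor lookup. It uses plain Python lists as a stand-in for the vector database; the toy 3-dimensional vectors and session names are invented for illustration, whereas the real fingerprints have 3072 dimensions. In general the measure can also be negative (down to -1) for vectors pointing in opposite directions; the 0 to 1 range is the one reported for PLoB's scores.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def most_similar(query, stored):
    """Return (session_id, score) for the stored vector closest to the query."""
    scored = ((sid, cosine_similarity(query, vec)) for sid, vec in stored.items())
    return max(scored, key=lambda pair: pair[1])


# Toy vectors standing in for embedded session fingerprints (hypothetical data).
STORED = {
    "admin-session": [1.0, 0.0, 0.0],
    "helpdesk-session": [0.0, 1.0, 0.0],
}

session_id, score = most_similar([0.9, 0.1, 0.0], STORED)
print(session_id, round(score, 3))
```

A brute-force scan like this is fine for a handful of vectors; a dedicated vector database exists precisely because the same lookup has to stay fast across very large numbers of stored sessions.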

Anomalous sessions were inspected by Splunk’s own AI agents for further context and a risk assessment to help analysts make informed decisions.

First attempts at detecting an anomaly failed. A synthetic malicious attack was scored as practically identical to a benign administrative session (0.97 similarity), which is exactly the problem Davis was trying to solve. Then the light went on. The key signal, the malicious command lines, was located at the end of a long generic summary. By the time the model examined them, it had already concluded the session was similar to other sessions. The needle in the haystack remained a needle in the haystack.

The solution was quite simple: work like a human analyst. “We re-engineered the fingerprint to act like an analyst’s summary, creating a ‘Key Signals’ section and ‘front-loading’ it at the very beginning of the text,” explained the researchers. The result was a far more effective fingerprint, and the vector database enabled easy separation of signal (the potentially malicious needle) from noise (the benign haystack of normal usage). “We elevated the AI from passive summarizer to active threat hunter.”
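
A rough sketch of what front-loading might look like when the fingerprint text is assembled. The function name, the section layout and above all the `SUSPICIOUS_TOKENS` list are hypothetical; the article does not say how the researchers select their key signals, so simple substring matching is used here purely as a placeholder.

```python
# Hypothetical indicator list for illustration; not the logic used in the research.
SUSPICIOUS_TOKENS = ("-enc", "mimikatz", "vssadmin delete", "certutil -urlcache")


def build_fingerprint(user, host, command_lines):
    """Build a text fingerprint with the notable commands placed first."""
    signals = [
        cmd for cmd in command_lines
        if any(token in cmd.lower() for token in SUSPICIOUS_TOKENS)
    ]

    lines = ["Key Signals:"]
    lines += [f"- {cmd}" for cmd in signals] or ["- none observed"]
    lines.append("")
    lines.append("Session Summary:")
    lines.append(f"User {user} logged on to {host} and ran {len(command_lines)} commands.")
    lines += [f"- {cmd}" for cmd in command_lines]
    return "\n".join(lines)


fingerprint = build_fingerprint(
    "alice",
    "WS01",
    ["ipconfig /all", "powershell -enc SQBFAFgA"],
)
print(fingerprint)
```

The generic summary is still present, but it now follows the commands an analyst would look at first, so the distinguishing content is no longer buried at the end of the text being embedded.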

But the similarity score can be suspiciously high as well as suspiciously low. “Humans are messy… An extremely high similarity score is a massive red flag for automation – a bot…” explained the researchers. The two suspicious scores, too low and too high, indicate outliers and clusters respectively.
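
The two-sided test can be expressed as a pair of thresholds on the nearest-neighbor score. The cut-off values below are assumptions chosen only to make the example concrete; the article does not state the thresholds PLoB uses.

```python
# Hypothetical thresholds for illustration; the article gives no actual values.
OUTLIER_BELOW = 0.80   # unlike anything seen before
CLUSTER_ABOVE = 0.99   # too similar to be human


def classify(score):
    """Label a session by its similarity to the closest known session."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score < OUTLIER_BELOW:
        return "outlier"
    if score > CLUSTER_ABOVE:
        return "cluster"
    return "normal"


for score in (0.42, 0.97, 0.999):
    print(score, classify(score))
```

Note that under these assumed thresholds a score of 0.97 lands in the unremarkable middle band, which is why the earlier fingerprint, scoring a synthetic attack at 0.97 against a benign session, let the attack pass unnoticed.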

The next stage is to find out if these ‘alerts’ are true indicators of potential malicious activity. Two AI ‘analysts’ were used to generate analyst-ready briefings: the Cisco Foundation Sec model and an OpenAI GPT-4o agent. We know that an accurate AI response is dependent upon an adequate AI prompt; and context and attention are essential. For the outliers, the context included: “Your primary goal is to determine WHY this session is so unique. Focus on novel executables, unusual command arguments, or sequences of actions that have not been seen before.”

For the clusters, it included: “Your primary goal is to determine if this session is part of a BOT, SCRIPT, or other automated attack. Focus on the lack of variation, the precision of commands, and the timing between events.”
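
Selecting the context by anomaly type could be as simple as the sketch below. The two goal strings are the ones quoted by the researchers; the function name, the `"outlier"`/`"cluster"` labels and the surrounding prompt layout are assumptions, and no model is actually called.

```python
# Goal text as quoted in the article; everything else here is hypothetical.
OUTLIER_GOAL = (
    "Your primary goal is to determine WHY this session is so unique. "
    "Focus on novel executables, unusual command arguments, or sequences "
    "of actions that have not been seen before."
)
CLUSTER_GOAL = (
    "Your primary goal is to determine if this session is part of a BOT, "
    "SCRIPT, or other automated attack. Focus on the lack of variation, "
    "the precision of commands, and the timing between events."
)

GOALS = {"outlier": OUTLIER_GOAL, "cluster": CLUSTER_GOAL}


def build_prompt(anomaly_type, fingerprint):
    """Combine the type-specific goal with the session fingerprint text."""
    if anomaly_type not in GOALS:
        raise ValueError(f"unknown anomaly type: {anomaly_type!r}")
    return f"{GOALS[anomaly_type]}\n\nSession fingerprint:\n{fingerprint}"


print(build_prompt("cluster", "Key Signals:\n- none observed"))
```

Keeping the goal separate from the fingerprint means the same session text can be sent to either ‘analyst’ with a question that matches the reason it was flagged.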

The output is a high-quality briefing for a human analyst that turns an anomaly score into actionable intelligence.

Like all new research projects, the researchers insist this is not the end, but the beginning. There are two areas for future research. The first is to improve on what has been learnt: a human in the loop, feeding back confirmation of whether a flagged session was malicious or benign, would continuously retrain and fine-tune the model, while the use of graph neural networks (GNNs) could improve the process.

The second is to take it beyond Windows. Potential areas include cloud environments, Linux systems, and SaaS applications. “This isn’t just a Windows tool; it’s a behavioral pattern analysis framework, ready to be pointed at any system where users take actions,” say the researchers.

Original Post URL: https://www.securityweek.com/plob-a-behavioral-fingerprinting-framework-to-hunt-for-malicious-logins/

Category & Tags: Identity & Access, Incident Response, PLoB, Splunk
