Source: www.infosecurity-magazine.com

Over half (51%) of malicious and spam emails are now generated using AI tools, according to a study by Barracuda, in collaboration with researchers from Columbia University and the University of Chicago.

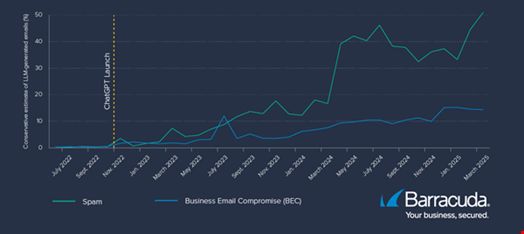

The research team analyzed a dataset of spam emails detected by Barracuda from February 2022 to April 2025. They used trained detectors to identify automatically whether a malicious or unsolicited email was generated using AI.

This process identified a steady rise in the proportion of AI-generated spam emails from November 2022 until early 2024.

ChatGPT, the chatbot that brought large language models (LLMs) to a mass audience, launched in November 2022.

In March 2024, a big spike in the proportion of AI-generated scam emails was identified. The proportion then fluctuated before peaking at 51% in April 2025.

Speaking to Infosecurity, Asaf Cidon, Associate Professor of Electrical Engineering and Computer Science at Columbia University, said no clear cause has been identified for the sudden spike.

“It’s hard to know for sure but this could be due to several factors: for example, the launch of new AI models that are then used by attackers or changes in the types of spam emails that are sent by attackers, increasing the proportion of AI generated ones,” he explained.

The researchers also observed a much slower increase in the use of AI-generated content in business email compromise (BEC) attacks. AI-generated messages made up 14% of all BEC attempts in April 2025.

This is likely because of the precise nature of these attacks, which involve impersonating a specific senior person in the organization with a request for a wire transfer or financial transaction. AI may not yet be as effective at this kind of targeted impersonation.

However, Cidon expects AI to be utilized in a growing proportion of BEC attempts as AI technology advances.

“In particular, given the recent rise of very effective and cheap voice cloning models, we think attackers will incorporate voice deepfakes into BEC attacks, so they can impersonate specific people such as the CEO, even better,” he said.

Attackers Primarily Using AI to Bypass Protections

The researchers identified bypassing email detection systems and making malicious messages more credible to recipients as the two main reasons attackers use AI in email attacks.

The AI-generated emails analyzed typically showed higher levels of formality, fewer grammatical errors and greater linguistic sophistication when compared to human-written emails.

This makes them more likely to bypass detection and appear more professional to recipients.

“This helps in cases where the attackers’ native language may be different to that of their targets. In the Barracuda dataset, most recipients were in countries where English is widely spoken,” the researchers noted in the report, published on June 18.

Attackers were also observed using AI to test wording variations to see which are more effective in bypassing defenses. The researchers said this process is similar to A/B testing done in traditional marketing.

The study found that LLM-generated emails did not significantly differ from human-generated ones in terms of the sense of urgency communicated. Urgency is a common tactic used in phishing attacks, designed to pressure the recipient into a fast and emotional response.

This suggests that AI is primarily being used to improve penetration rates and plausibility, rather than facilitating a change in tactics.

Original Post URL: https://www.infosecurity-magazine.com/news/ai-generates-spam-malicious-emails/
