Source: thehackernews.com

Sep 29, 2023 | THN | Artificial Intelligence / Malware

Malicious ads served inside Microsoft Bing’s artificial intelligence (AI) chatbot are being used to distribute malware to users searching for popular tools.

The findings come from Malwarebytes, which revealed that unsuspecting users can be tricked into visiting booby-trapped sites and installing malware directly from Bing Chat conversations.

Introduced by Microsoft in February 2023, Bing Chat is an interactive search experience powered by OpenAI’s GPT-4 large language model. A month later, the tech giant began exploring placing ads in those conversations.

But the move has also opened the door to threat actors who use malvertising tactics to propagate malware.

“Ads can be inserted into a Bing Chat conversation in various ways,” Jérôme Segura, director of threat intelligence at Malwarebytes, said. “One of those is when a user hovers over a link and an ad is displayed first before the organic result.”

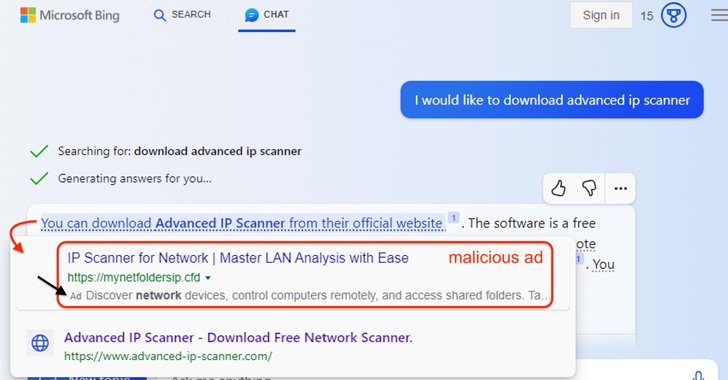

In an example highlighted by the cybersecurity vendor, a Bing Chat query to download a legitimate software tool called Advanced IP Scanner returned a link that, when hovered over, displayed a malicious ad pointing to a fraudulent site ahead of the official site hosting the tool.

Clicking the link takes the user to a traffic direction system (TDS) that fingerprints the visitor to determine whether the request actually originates from a real human (as opposed to a bot, crawler, or sandbox) before redirecting them to a decoy page containing the rogue installer.

The installer is configured to run a Visual Basic Script that beacons to an external server with the likely goal of receiving the next-stage payload. The exact nature of the malware delivered is presently unknown.

A notable aspect of the campaign is that the threat actor managed to infiltrate the ad account of a legitimate Australian business and used it to create the ads.

“Threat actors continue to leverage search ads to redirect users to malicious sites hosting malware,” Segura said. “With convincing landing pages, victims can easily be tricked into downloading malware and be none the wiser.”

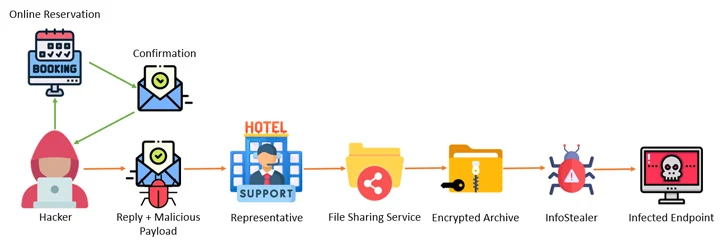

The revelation comes as Akamai and Perception Point uncovered a multi-step campaign in which attackers first compromise the systems of hotels, booking sites, and travel agencies with information-stealer malware, then use that access to the accounts to go after customers' financial data through fake reservation pages.

“The attacker, masquerading as the hotel, reaches out to the customer through the booking site, urging the customer to ‘re-confirm their credit card,’ then steals the customer’s information,” Akamai researcher Shiran Guez said, noting how the attacks prey on the victim’s sense of urgency to pull off the operation.

Cofense, in a report published this week, said the hospitality sector has been on the receiving end of a “well-crafted and innovative social engineering attack” that’s designed to deliver stealer malware such as Lumma Stealer, RedLine Stealer, Stealc, Spidey Bot, and Vidar.

“As of now, the campaign only targets the hospitality sector, primarily targeting luxury hotel chains and resorts, and uses lures relative to that sector such as booking requests, reservation changes, and special requests,” Cofense said.

“The lures for both the reconnaissance and phishing emails match accordingly and are well thought out.”

The enterprise phishing threat management firm said it also observed malicious HTML attachments intended to carry out Browser-in-the-Browser (BitB) attacks by serving seemingly innocuous pop-up windows that entice email recipients into providing their Microsoft credentials.
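In a BitB attack, the "pop-up" is not a real browser window but HTML drawn inside the page, often complete with a fake address bar showing a genuine sign-in address. The sketch below is a hypothetical heuristic, not Cofense's detection logic: it flags an HTML attachment that displays `login.microsoftonline.com` as text, contains a password field, and has a form posting to a host outside an assumed, illustrative allow-list of Microsoft domains. It will miss pages that exfiltrate credentials via JavaScript instead of a form.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

# Hypothetical allow-list for illustration only; not an official list of Microsoft sign-in hosts.
MICROSOFT_HOSTS = ("microsoftonline.com", "live.com", "microsoft.com")


def _is_microsoft_host(host: str) -> bool:
    """True if host equals an allow-listed domain or is a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in MICROSOFT_HOSTS)


class _AttachmentScanner(HTMLParser):
    """Collects form actions, password fields, and text content from an HTML attachment."""

    def __init__(self) -> None:
        super().__init__()
        self.form_actions: list[str] = []
        self.has_password_field = False
        self.text_parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "form":
            # An empty or missing action posts back to the attachment itself, which is also suspicious.
            self.form_actions.append(attributes.get("action") or "")
        elif tag == "input" and (attributes.get("type") or "").lower() == "password":
            self.has_password_field = True

    def handle_data(self, data):
        self.text_parts.append(data)


def looks_like_bitb(html: str) -> bool:
    """Heuristic: shows a Microsoft sign-in address as text but posts credentials elsewhere."""
    scanner = _AttachmentScanner()
    scanner.feed(html)
    text = " ".join(scanner.text_parts).lower()
    shows_ms_address = "login.microsoftonline.com" in text
    posts_elsewhere = any(
        not _is_microsoft_host(urlsplit(action).hostname or "")
        for action in scanner.form_actions
    )
    return shows_ms_address and scanner.has_password_field and posts_elsewhere
```

A heuristic like this is only a triage signal; a real mail filter would combine it with sender reputation and sandboxed rendering.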

The discoveries are a sign that threat actors are constantly finding new ways to infiltrate unwitting targets. Users are advised to avoid clicking unsolicited links even when they look legitimate, to be wary of urgent or threatening messages demanding immediate action, and to check URLs for signs of deception.
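Checking a URL "for signs of deception" can be partly automated. The following is a minimal sketch, assuming you already know the vendor's official domain (the `vendor.example` domain and the `url_red_flags` helper are hypothetical). It flags a few common tricks: a non-HTTPS scheme, user info placed before `@` to disguise the real host, raw IP hosts, punycode look-alike labels, and hosts that are neither the official domain nor one of its subdomains.

```python
import ipaddress
from urllib.parse import urlsplit


def url_red_flags(url: str, expected_domain: str) -> list[str]:
    """Return human-readable warnings for a link that should lead to expected_domain."""
    flags: list[str] = []
    parts = urlsplit(url)
    # urlsplit lower-cases scheme and hostname; strip a trailing root dot.
    host = (parts.hostname or "").rstrip(".")
    expected = expected_domain.lower().rstrip(".")

    if parts.scheme != "https":
        flags.append("not served over HTTPS")
    if parts.username is not None or parts.password is not None:
        flags.append("contains user info before '@'; the real host is after it")
    try:
        ipaddress.ip_address(host)
        flags.append("host is a raw IP address")
    except ValueError:
        pass  # not an IP literal, which is the normal case
    if any(label.startswith("xn--") for label in host.split(".")):
        flags.append("host uses punycode (possible look-alike characters)")
    if not (host == expected or host.endswith("." + expected)):
        flags.append(f"host {host!r} is not {expected!r} or one of its subdomains")
    return flags
```

For example, `url_red_flags("http://vendor.example@evil.example/setup.exe", "vendor.example")` reports the missing HTTPS, the `@` trick, and the mismatched host. Note that an ad click usually passes through redirectors first, so the check is most useful on the final landing URL.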

Original post URL: https://thehackernews.com/2023/09/microsofts-ai-powered-bing-chat-ads-may.html