Source: securityaffairs.com – Author: Pierluigi Paganini

Microsoft AI research division accidentally exposed 38TB of sensitive data

Pierluigi Paganini

September 18, 2023

Microsoft AI researchers accidentally exposed 38TB of sensitive data through a public GitHub repository, an exposure that began in July 2020.

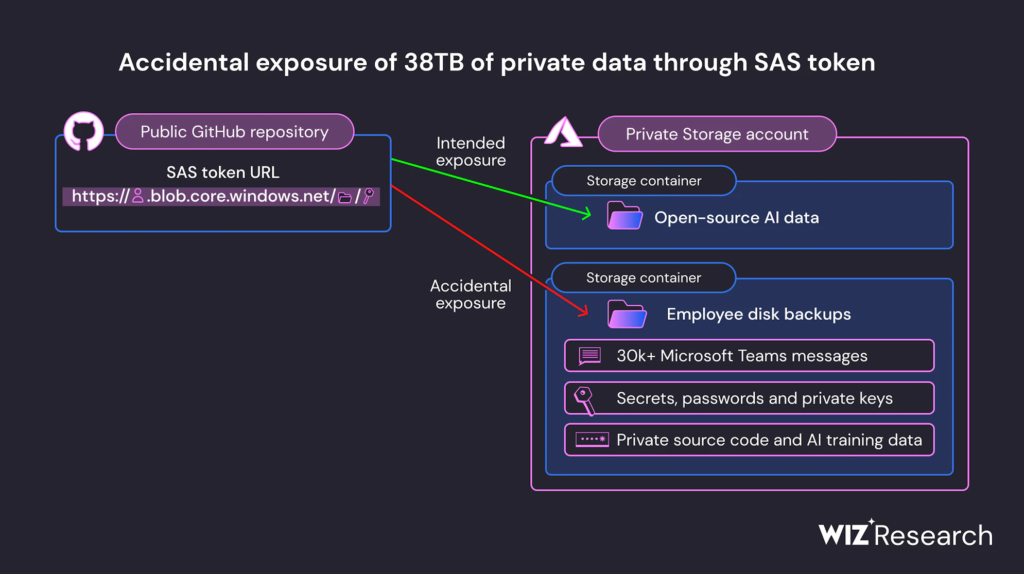

Cybersecurity firm Wiz discovered that Microsoft’s AI research division accidentally leaked 38TB of sensitive data while publishing a bucket of open-source training data on GitHub.

The exposed data included a disk backup of two employees’ workstations containing secrets, private keys, passwords, and over 30,000 internal Microsoft Teams messages.

“The researchers shared their files using an Azure feature called SAS tokens, which allows you to share data from Azure Storage accounts.” reads the report published by Wiz. “The access level can be limited to specific files only; however, in this case, the link was configured to share the entire storage account, including another 38TB of private files.”

The Wiz Research team made the discovery while scanning the Internet for misconfigured storage containers exposing cloud-hosted data. The experts found a GitHub repository named robust-models-transfer under the Microsoft organization.

The repository belongs to Microsoft’s AI research division, which used it to provide open-source code and AI models for image recognition. The Microsoft AI research team started publishing data in July 2020.

Microsoft used Azure SAS tokens to share data stored in Azure Storage accounts used by its research team.

The signed Azure Storage URL published in the repository for downloading the models was mistakenly configured to grant permissions on the entire storage account, exposing private data.

“However, this URL allowed access to more than just open-source models. It was configured to grant permissions on the entire storage account, exposing additional private data by mistake.” continues the company. “The simple step of sharing an AI dataset led to a major data leak, containing over 38TB of private data. The root cause was the usage of Account SAS tokens as the sharing mechanism. Due to a lack of monitoring and governance, SAS tokens pose a security risk, and their usage should be as limited as possible.”

Wiz pointed out that SAS tokens cannot be easily tracked because Microsoft does not provide a centralized way to manage them within the Azure portal.
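Since SAS tokens cannot be tracked centrally, one practical precaution is to inspect a SAS URL before it is published. The sketch below is a minimal illustration, not a tool from Wiz or Microsoft: it reads the standard SAS query parameters (`ss` and `srt` for an account-level token, `sp` for permissions, `se` for expiry) and returns warnings. The function name `audit_sas_url`, the 7-day lifetime limit, and the example URLs are assumptions made for illustration. The example token is not the one from the incident; only its expiry date mirrors the timeline.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlsplit, parse_qs


def audit_sas_url(url, max_lifetime=timedelta(days=7), now=None):
    """Return a list of warnings for a SAS URL, based only on its query string."""
    now = now or datetime.now(timezone.utc)
    params = {k: v[0] for k, v in parse_qs(urlsplit(url).query).items()}
    warnings = []

    # Account SAS tokens carry `ss` (services) and `srt` (resource types);
    # service SAS tokens carry `sr` (a single resource type) instead.
    if "ss" in params and "srt" in params:
        warnings.append("account-level SAS: grants access beyond a single container or blob")

    # `sp` lists permissions as letters; anything beyond read/list is flagged.
    extra = set(params.get("sp", "")) - {"r", "l"}
    if extra:
        warnings.append("permissions beyond read/list: " + "".join(sorted(extra)))

    # `se` is the expiry time in ISO 8601 format (UTC).
    expiry_raw = params.get("se")
    if expiry_raw is None:
        warnings.append("no expiry found (may be governed by a stored access policy)")
    else:
        expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
        if expiry.tzinfo is None:
            expiry = expiry.replace(tzinfo=timezone.utc)
        if expiry - now > max_lifetime:
            warnings.append(f"expiry {expiry.date()} exceeds the allowed lifetime")
    return warnings


# Hypothetical URLs for illustration only (placeholder account and signature).
risky = (
    "https://example.blob.core.windows.net/models"
    "?sv=2020-08-04&ss=b&srt=sco&sp=rwdlac&se=2051-10-06T00:00:00Z&sig=REDACTED"
)
scoped = (
    "https://example.blob.core.windows.net/models/weights.pt"
    "?sv=2020-08-04&sr=b&sp=r&se=2023-06-25T00:00:00Z&sig=REDACTED"
)
check_time = datetime(2023, 6, 22, tzinfo=timezone.utc)

for warning in audit_sas_url(risky, now=check_time):
    print(warning)
```

A check like this only reads what the URL itself declares. It cannot tell whether a token has already been revoked or how it has been used, so it complements rather than replaces the monitoring and governance that Wiz recommends.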

Microsoft said that the data leak did not expose customer data.

“No customer data was exposed, and no other internal services were put at risk because of this issue. No customer action is required in response to this issue.” reads the post published by Microsoft.

Below is the timeline of this security incident:

- Jul. 20, 2020 – SAS token first committed to GitHub; expiry set to Oct. 5, 2021

- Oct. 6, 2021 – SAS token expiry updated to Oct. 6, 2051

- Jun. 22, 2023 – Wiz Research finds and reports issue to MSRC

- Jun. 24, 2023 – SAS token invalidated by Microsoft

- Jul. 7, 2023 – SAS token replaced on GitHub

- Aug. 16, 2023 – Microsoft completes internal investigation of potential impact

- Sep. 18, 2023 – Public disclosure


Original Post URL: https://securityaffairs.com/151004/data-breach/microsoft-ai-data-leak.html
